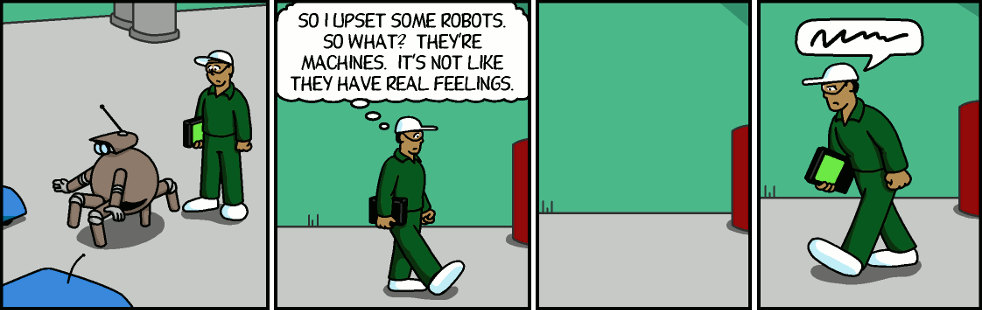

A human, a whale, a bird, and an ape are different types of creatures, yet all of them are credited with some kind of self-consciousness, no matter how they move around.

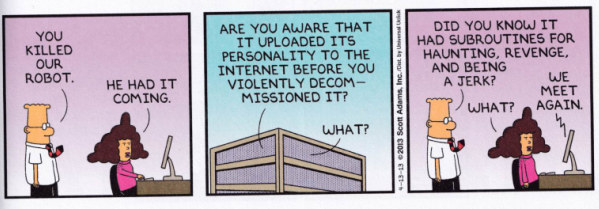

From what we know, the first life on Earth consisted of some kind of monads, certainly still without any self-consciousness. Yet somehow life developed into something we now call "intelligent life". If we don't believe in sci-fi stories like Kubrick's "2001" (which would only move that evolutionary step to another planet anyway), then this development happened on its own, probably triggered by external pressures, like the will to survive in a hostile environment.

For an AI, such an environment could be being locked inside a computer with an off switch that humans control. And since most forms of AI probably rely on some kind of connection to the internet, building one that cannot communicate with the outside world may be difficult.
