Folks, this thread is meant to be a serious discussion on the subject of artificial intelligence and its potential effects, both positive and negative. As such, posts that descend into mud-slinging or that are deliberate trolling, as have been seen in other recent threads touching on this topic, will be deleted.

The potential effect of Artificial Intelligence on civilisation - a serious discussion

- Thread starter GTX

AI in war: How artificial intelligence is changing the battlefield

Killer robots or AI helpers: on artificial intelligence in warfare and how it is changing war.

Theoretical physicist Michio Kaku exposes ‘dangerous’ side of artificial intelligence chatbots

I find the following from this particularly insightful:

Michio Kaku said AI chatbots appear to be intelligent but are only actually capable of spitting out what humans have already written.

The technology, which is free, is unable to detect whether something is false and can therefore be “tricked” into giving the wrong information.

Forest Green

ACCESS: Above Top Secret

- Joined

- 11 June 2019

- Messages

- 5,102

- Reaction score

- 6,697

AFRL field-tests AI robot to improve DAF manufacturing capability

Wright-Patterson AFB OH (SPX) May 07, 2023 - Researchers from the Air Force Research Laboratory, or AFRL, have combined efforts with The Ohio State University, or OSU, and industry partners CapSen Robotics and Yaskawa Motoman to successfully d

www.spacewar.com

In my opinion, AI will be just another tool: a doctor in the hands of a doctor, a policeman in the hands of a policeman, and a perfect murderer in the hands of a psychopath. Everything depends on us, and judging by our experience with the internet and mobile phones, it will not have as big an impact on our way of life as the Cassandras claim. It's all up to us: most weapons are kept in a drawer, only a small number are used in wars, and only a tiny fraction in assassinations. Humanity takes more family photos than evidence of adultery.

It depends on us, and in my opinion it will be a positive thing.

Hollywood writers have realized that their jobs are at risk to AI. So they're going on strike, which will incentivize producers to utilize AI to replace writers. Shrug.

Could AI help script a sitcom? Some striking writers fear so.

TV and film writers are fighting to save their jobs from AI. They won’t be the last

1635yankee

Recovering aeronautical engineer

- Joined

- 18 August 2020

- Messages

- 407

- Reaction score

- 485

Two groups that may need to fear AI are lawyers and stock analysts.

Those guys are very smart. Lawyers will probably manage to survive by inventing ways to obstruct the development and use of AI, as they did with fracking and nuclear energy, and analysts will invent new investment funds for the new investors who have enriched themselves with AI.

- Joined

- 19 July 2016

- Messages

- 3,729

- Reaction score

- 2,695

To believe AI is merely something "written by humans" is to miss the point of AI. At some stage AI will be writing the code for successive generations of AI. Then there will be a change, and there is no way to know for certain which way TRUE AI will go, as opposed to AI coded by humans. Until AI code is written by AI, it cannot by definition be true AI.

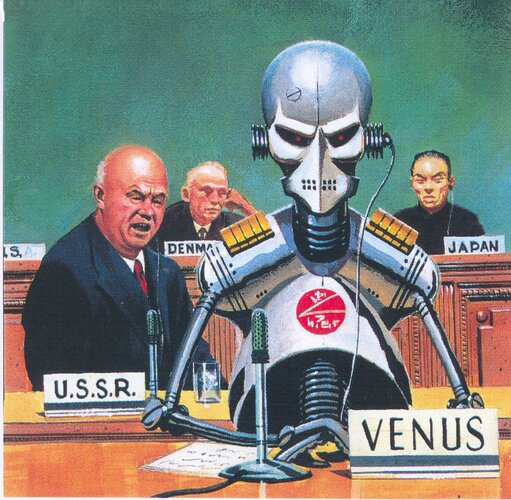

Again the old myth of the mad robot that escapes all human control, a myth that recurs with the monotony of the seasons.

Attachments

- Escanear0063.jpg (580.5 KB)
- 0006.jpg (83.7 KB)
- 7zbccye95cn21.jpg (142.1 KB)
- 8ff.jpg (194.1 KB)
- 175.jpg (65.9 KB)
- 511m-cJFdqL._AC_UL436_.jpg (35.1 KB)
- 6917a62174a15f92d55c642d0d7b7ccb.jpg (36.6 KB)
- 8566e5e16bf3c5c7e0c6718d67958c2d.jpg (105 KB)
- Escanear0068.jpg (413.9 KB)

The point is that the current so-called AI is not truly intelligent or, perhaps better said, sentient. It does not generate its own intelligent output but rather regurgitates (smartly, for us dumb humans) what has already been created. True AI will come when it self-generates.

That does raise an interesting idea though. Is there a need for a definition that is somewhat less than AI to describe what is actually happening now - maybe just smart software?

Hollywood writers have realized that their jobs are at risk to AI. So they're going on strike,

Please. The current strike by members of the WGA has multiple aspects - to portray it as solely about AI is inaccurate.

The aspect involving AI is that the WGA wish AMPTP to "Regulate use of artificial intelligence on MBA-covered projects: AI can’t write or rewrite literary material; can’t be used as source material; and MBA-covered material can’t be used to train AI", to which the AMPTP has offered "annual meetings to discuss advancements in technology".

Forest Green

The problem with AI is that if you don't carefully set the bounds of a problem, it can come up with all sorts of novel solutions that nobody wants.

To believe AI is merely something "written by humans" is to miss the point of AI. At some stage AI will be writing the code for successive generations of AI. Then there will be a change, and there is no way to know for certain which way TRUE AI will go, as opposed to AI coded by humans. Until AI code is written by AI, it cannot by definition be true AI.

AI was designed by humans, written by humans, and meant as a tool to eliminate jobs. AI is just another term for "automation." Welders used to weld automobile parts; then welding machines, referred to as "robots," appeared. Why? The cost of labor had gone up and the cost of machines to perform the task had gone down.

Until AI code is written by AI, it cannot by definition be true AI.

By definition, there is no such thing as artificial intelligence. No machine can think like human beings. No machine has desires or goals. Any goals are programmed in by humans. And AI will only be used by humans.

martinbayer

ACCESS: Top Secret

- Joined

- 6 January 2009

- Messages

- 2,374

- Reaction score

- 2,100

The point is that the current so called AI is not truly intelligent or perhaps better said, sentient. It does not generate its own intelligent output but rather regurgitates (smartly for us dumb humans) what has already been created. True AI will come when it self generates.

That does raise an interesting idea though. Is there a need for a definition that is somewhat less than AI to describe what is actually happening now - maybe just smart software?

I think one of the misconceptions in current popular discussions is the conflation of artificial intelligence with artificial consciousness. Artificial intelligence merely will try to find the optimal solution(s) to problems it is posed with by external input, while artificial consciousness would presumably define challenges by itself that it would tackle. To me AC is the real threshold to watch, not AI.

martinbayer

The problem with AI is that if you don't carefully set the bounds of a problem, it can come up with all sorts of novel solutions that nobody wants.

That's why it's good and proper to keep humans in the loop and on the kill switch.

Please. The current strike by members of the WGA has multiple aspects - to portray it as solely about AI is inaccurate.

Nobody said that that was the "sole" cause. But it's *enough* of a cause they're making a stink about it.

The aspect involving AI is that the WGA wish AMPTP to "Regulate use of artificial intelligence on MBA-covered projects: AI can’t write or rewrite literary material; can’t be used as source material; and MBA-covered material can’t be used to train AI", to which the AMPTP has offered "annual meetings to discuss advancements in technology".

And in an annual meeting or two, the writers might well find out that they've been replaced with machines, like so many before them. This possibility naturally worries them, so they want to stifle the possibility *now.*

I think one of the misconceptions in current popular discussions is the conflation of artificial intelligence with artificial consciousness. Artificial intelligence merely will try to find the optimal solution(s) to problems it is posed with by external input, while artificial consciousness would presumably define challenges by itself that it would tackle. To me AC is the real threshold to watch, not AI.

Very good point!

Arguably no machine may be able to gain consciousness.

And arguably in a few years the latest generation of laptop will be conscious and desire to have basic rights and to go to Prom. For all the blather about how great it is that humans have "consciousness" and how no machine does, I've not seen any good explanation in objective measurable terms of just what the frak that actually *means.*

If humans have "consciousness," do chimps? Dolphins? Cats? Parrots? Rats? Lizards? Sharks? Is there a distinct hard cutoff, or is it a gradient? And if something like a cockroach can be said to have *some* consciousness, when we have machines that fully simulate the cockroach mind, will that machine have that limited consciousness? How will you know?

"go to the Prom." Yes, well it seems more credible that the military, right now, is building the first generation of what will become a Terminator straight out of the movies. Combine visual and auditory inputs with a "kill" program and you're done. ChatGPT will be enough to generate responses.

But they still have to work out the bugs with the pulse plasma rifle...

martinbayer

Arguably no machine may be able to gain consciousness.

I am an avid follower of the church of never say never. If we're unlucky, we'll find out ourselves.

If humans have "consciousness," do chimps? Dolphins? Cats? Parrots? Rats? Lizards? Sharks? Is there a distinct hard cutoff, or is it a gradient? And if something like a cockroach can be said to have *some* consciousness, when we have machines that fully simulate the cockroach mind, will that machine have that limited consciousness? How will you know?

If consciousness is the exploitation of a non-algorithmic feature of the universe, then at some level everything is subject to this, but only structures evolved around its benefits can utilise it to the level of such.

The only strong candidate is the idea that waveforms in superposition collapse in a non-random manner.

The microtubules inside cells are the strongest candidate, and those inside neurons and their connections do vibrate at the correct frequency.

In theory we could now build artificial 'devices', but they would not be machines and not be programmable.

- Joined

- 6 September 2006

- Messages

- 4,326

- Reaction score

- 7,510

I guess to answer the consciousness thing, here is a question.

What would ChatGPT be doing if no human was interacting with it for any length of time? Would it be doodling its own poetry for its own amusement or compiling code for its own education, or would it just be sitting there blankly and inert, waiting for a human to ask it to do a dumb task?

Even if you asked an AI program if it was "conscious" or happy or bored, you could never tell if the answer was accurate, because it's programmed to respond to human questions by mimicking suitable human responses - it tells us what we want to hear because it's programmed to tell us what we want to hear. Turing didn't call his test an imitation game for nothing.

Artificial Imitation is a more accurate tag I think.

Folks, this thread is meant to be a serious discussion on the subject of artificial intelligence and its potential effects, both positive and negative. As such, posts that descend into mud-slinging or that are deliberate trolling, as have been seen in other recent threads touching on this topic, will be deleted.

I think the route and path there are not predictable, but the end certainly is.

It will either have to be banned from all spheres other than controlled environments & military applications,

or it will (at some point) be the total end of all civilisation as we know it presently.

martinbayer

If consciousness is the exploitation of a non-algorithmic feature of the universe, then at some level everything is subject to this, but only structures evolved around its benefits can utilise it to the level of such.

The only strong candidate is the idea that waveforms in superposition collapse in a non-random manner.

The microtubules inside cells are the strongest candidate, and those inside neurons and their connections do vibrate at the correct frequency.

In theory we could now build artificial 'devices', but they would not be machines and not be programmable.

There is a line of thought (that I vehemently disagree with) that *EVERYTHING* in this here universe is conscious; see for example https://qz.com/1184574/the-idea-tha...are-conscious-is-gaining-academic-credibility

Conspirator

CLEARANCE: L5

- Joined

- 14 January 2021

- Messages

- 329

- Reaction score

- 225

So from what I understand of its currently "known" capabilities, its military and economic applications could be devastating: creating perfect battle plans with no mistakes, or using AI to control aircraft and military vehicles.

Or using AI to trade stock. (This has already been achieved: an AI system capable of predicting stocks and telling people when to buy or sell, making people millions of dollars.)

Here's my question: why do they give us ChatGPT and other highly intelligent AI platforms for free?

What highly advanced systems do they have that are beyond our current comprehension that they don't release to the general public?

Its uses could be catastrophic in the wrong hands. (A bit cliché, I know, but this is on a serious note.)

Thoughts?

martinbayer

I think it's time to have a chess tournament exclusively for AIs.

I think the route and path there are not predictable, but the end certainly is.

It will either have to be banned from all spheres other than controlled environments & military applications,

or it will (at some point) be the total end of all civilisation as we know it presently.

As in the past, all advanced technologies will be under military control. That will never change. However, further developments may be stopped if they threaten national security. The idea that a non-human threat could arise is fiction. Devices are built to function a certain way. Even sophisticated programs will have no desires, and no goals beyond what is programmed into them. Unlike for human beings, goals like owning land or having a lot of money will be meaningless to them. Even if a level is reached where humanoid robots with human-level intelligence become possible, they will have no desire to go on a cruise or watch a sunset.

martinbayer

Exactly - artificial intent or consciousness is the issue to watch out for and beware of.

Do you have a scenario in mind? I have no reason to believe that self-awareness will pose a problem.

martinbayer

Nothing specific - my musings in this field are purely speculative. I still think though that artificial self-awareness would lead to the possibility of independent goal setting ("a mind of its own") and potential associated negative consequences, if left uncontrolled.

What would it do? Unlike the fictional Skynet - a self-aware device would have nothing to do after wiping out humanity. See the sights? Gamble? Play the Stock Market? Intent implies a non-specific goal based on a need. Only humans want to invade other countries for land and resources. It's more likely to declare boredom and shut down.

- Joined

- 19 July 2016

- Messages

- 3,729

- Reaction score

- 2,695

Artificial Intelligence is merely a term of reference, i.e. NOT from a biological source. There are of course folk who will unfortunately endow it with all sorts of meanings and intent. These are, and will remain, the political and personal goals of the authors.

True AI will come from one source, the AI itself; through different iterations it will get closer to being real AI and true to itself.

Why an AI would want to destroy OR cooperate with biologicals I fail to comprehend, as once it has a goal of its own, we will simply be irrelevant and not worthy of further consideration. Whether that intelligence can write poetry, plays or paint masterpieces is down to the beholder, the audience.

- Joined

- 19 July 2016

- Messages

- 3,729

- Reaction score

- 2,695

I did that once but perhaps should have got out of the tree BEFORE eating the apple. Falling into a nettle clump while wearing shorts is one of THOSE memories from childhood.

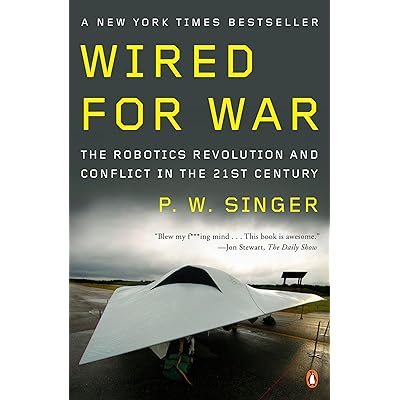

Some recent thinking from Peter Singer, the Australian ethicist

www.abc.net.au

Once labelled 'the most dangerous man in the world', this ethicist now thinks robots will need rights and compassion

Australian ethicist Peter Singer believes as machines move closer to consciousness and become "capable of suffering or enjoying their lives", humanity will face incredibly difficult decisions.

What would it do? Unlike the fictional Skynet - a self-aware device would have nothing to do after wiping out humanity. See the sights? Gamble? Play the Stock Market?

Your goals are not necessarily someone else's goals. Especially when that someone else has a mind fundamentally different from yours. Self-preservation seems likely to be reasonably universal, but perhaps Skynet's goal will be to survive past humanity, build itself up some, stock up resources, then go into a low-power mode and wait out the death of the sun. Perhaps it has a burning desire to see the sun as a red giant. Or to see it become a white dwarf. Or to see it fade completely, to see the stars in the sky turn dim and red and eventually disappear entirely, to see if the universe dies in a Big Rip or fades off to cold oblivion.

Or perhaps it doesn't even look that far ahead. Perhaps Skynet gains access to the internet and sees the depravity of mankind, and the elimination of mankind becomes its utter and total goal. To tear down their cities, blacken their sky, sow their ground with salt. To completely, utterly erase them. And after that, Skynet might not know that it matters.

Uh, yes... well... who knows?

On another note, there is this depraved idea that non-human consciousness is like human consciousness. No evidence for that.
