Hi all - I noticed the site was sluggish and discovered thousands of open connections coming from Alibaba's Singapore datacentre.

Not sure if it's intended to take down the forum, or if they are cloning the forum posts or something.

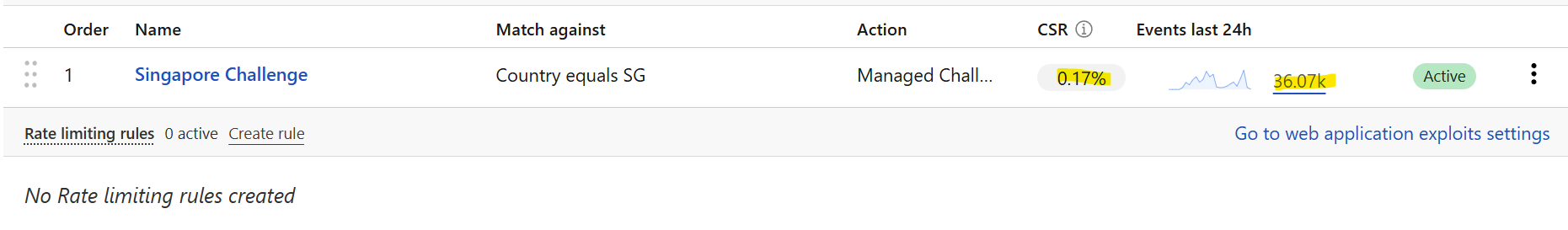
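For anyone curious how this sort of pattern can be spotted, here is a minimal sketch that tallies established connections per remote address. It assumes input shaped like `ss -tn` output; the function name `count_remote_ips` and the sample lines (documentation-only addresses) are made up for illustration and are not taken from the forum server.

```python
from collections import Counter


def count_remote_ips(ss_output: str) -> Counter:
    """Tally established TCP connections per remote address."""
    counts = Counter()
    for line in ss_output.splitlines():
        fields = line.split()
        # Assumed columns: State Recv-Q Send-Q Local:Port Peer:Port
        if len(fields) < 5 or fields[0] != "ESTAB":
            continue  # skips the header row and non-established sockets
        # Split off the port from the right so the address part stays intact
        ip, _, _port = fields[4].rpartition(":")
        counts[ip] += 1
    return counts


# Hypothetical sample using documentation-only addresses (RFC 5737)
SAMPLE = """State Recv-Q Send-Q Local Address:Port Peer Address:Port
ESTAB 0 0 192.0.2.10:443 203.0.113.5:51000
ESTAB 0 0 192.0.2.10:443 203.0.113.5:51001
ESTAB 0 0 192.0.2.10:443 198.51.100.7:40000
"""

# The busiest remote addresses come first
top = count_remote_ips(SAMPLE).most_common(2)
```

A single address or network range with a very large count at the top of that list is the kind of signal described above.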

I have brought forward the planned transition to Cloudflare WAF/DDoS protection for the site, so it is now in place earlier than intended. This will progressively kick in over the next 24 hours.

As a temporary measure, users from Singapore will have to solve a challenge to view the site. Hopefully this is only needed while I figure out what is going on.
