southwestforests

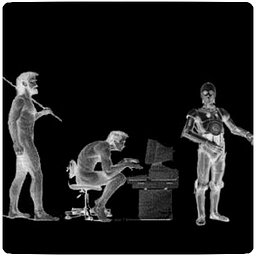

It can merely be the available things which the AI can reference, for instance. Without more data... *maybe.* It could well be that the humans had been actively using "affirmative action" thinking in order to artificially inflate race- and sex-based quotas... and the AI hadn't been trained that way; instead it looked only at actual qualifications. Meritocracy can look like bigotry if you're not qualified.

October 10, 2018, 6:04 PM

Amazon scraps secret AI recruiting tool that showed bias against women

By Jeffrey Dastin

Amazon.com Inc's <AMZN.O> machine-learning specialists uncovered a big problem: their new recruiting engine did not like women.

That is because Amazon’s computer models were trained to vet applicants by observing patterns in resumes submitted to the company over a 10-year period. Most came from men, a reflection of male dominance across the tech industry.

In effect, Amazon’s system taught itself that male candidates were preferable. It penalized resumes that included the word “women’s,” as in “women’s chess club captain.” And it downgraded graduates of two all-women’s colleges, according to people familiar with the matter. They did not specify the names of the schools.

Amazon edited the programs to make them neutral to these particular terms. But that was no guarantee that the machines would not devise other ways of sorting candidates that could prove discriminatory, the people said.
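To make the mechanism described above concrete, here is a minimal sketch in Python. It is not Amazon's system, whose details are not public. It swaps in a simple per-token log-odds score (naive-Bayes style) and uses a tiny invented hiring history. The names `HISTORY`, `token_log_odds`, `score`, and the token `college_x` are all hypothetical. The sketch shows two things from the article: a model fitted to skewed past decisions ends up penalizing the token "women's", and ignoring that one token still leaves a correlated proxy token carrying a penalty.

```python
import math
from collections import Counter

# Hypothetical toy history, invented purely for illustration:
# (set of resume tokens, whether the candidate was hired).
HISTORY = [
    ({"python", "chess", "captain"}, True),
    ({"java", "chess"}, True),
    ({"python", "rugby"}, True),
    ({"java", "rugby", "captain"}, True),
    ({"python", "women's", "chess"}, True),
    ({"python", "women's", "chess", "college_x"}, False),
    ({"java", "women's", "captain", "college_x"}, False),
    ({"python", "college_x"}, False),
    ({"java", "rugby"}, False),
]


def token_log_odds(history, smoothing=1.0):
    """Return log(P(token | hired) / P(token | rejected)) for each token.

    Add-one style smoothing keeps the ratio finite for tokens that
    appear in only one of the two groups.
    """
    hired, rejected = Counter(), Counter()
    n_hired = n_rejected = 0
    for tokens, was_hired in history:
        if was_hired:
            hired.update(tokens)
            n_hired += 1
        else:
            rejected.update(tokens)
            n_rejected += 1

    weights = {}
    for token in set(hired) | set(rejected):
        p_hired = (hired[token] + smoothing) / (n_hired + 2 * smoothing)
        p_rejected = (rejected[token] + smoothing) / (n_rejected + 2 * smoothing)
        weights[token] = math.log(p_hired / p_rejected)
    return weights


def score(tokens, weights, ignored=frozenset()):
    """Sum the learned weights of a resume's tokens, skipping ignored ones."""
    return sum(weights.get(t, 0.0) for t in tokens if t not in ignored)


weights = token_log_odds(HISTORY)

# Two resumes identical except for the single token "women's".
base = {"python", "chess", "captain"}
with_term = base | {"women's"}

# "Neutralizing" one term: the proxy token still lowers the score.
neutral = frozenset({"women's"})
with_proxy = base | {"college_x"}
proxy_gap = score(with_proxy, weights, neutral) - score(base, weights, neutral)
```

In this toy setup `score(with_term, weights)` comes out lower than `score(base, weights)`, and `proxy_gap` stays negative even with "women's" ignored, which is the "no guarantee" problem the people quoted in the article describe.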

Also

Amazon’s sexist AI recruiting tool: how did it go so wrong?

Julien Lauret

Becoming Human: Artificial Intelligence Magazine

Aug 16, 2019

Machine learning projects are hard. “Biased data” is only one element

becominghuman.ai

But what happened at Amazon, and why did they fail? We can learn many valuable lessons from a high-profile failure. The Reuters article and the subsequent write-ups by Slate and others are written for a general news-reading audience. As an AI practitioner myself, I’m more interested in the technical and business details. I was frustrated by how shallow, and sometimes wrong, most of the reporting was. Surely some professionals and enthusiasts would like a more in-depth write-up?

Below is my attempt at a post-mortem case study of the Amazon project. I hope you find it interesting.

A disclaimer before we start: I’ve never worked for Amazon, I don’t personally know the people involved, and I’m certainly not privy to any confidential information. Don’t expect this piece to divulge any secrets. The analysis below draws on my own experience and whatever information is publicly known from news sources.

The piece is long, so let’s cut to the chase. AI projects are complicated pieces of business and engineering, and there is more to them than “AI bias” and “unbalanced data.” Algorithms aren’t morally biased. They have no agency, no consciousness, no autonomy, and no sense of morals. They do what their designers ask them to do. Even data isn’t biased. It’s only data. This means that to understand what happened at Amazon, or in any AI project, we need to understand the human designers, their goals, the resources they had, and the choices they had to make.