Just think... once relieved of the hardship of writing songs and slapping paint on cancas, these poor artsy folks will be freed to, say, spend their days improving themselves in the underground lithium mines or scraping fatbergs off the walls of sewer lines. The dream!And thanks to AI, soon those poor put upon artistic types will be relieved of the burden of all that hard work.Drudgery? ... A lot of hard work.

To quote a famous person: Double pfffftttt !

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

The potential effect of Artificial Intelligence on civilisation - a serious discussion

- Thread starter GTX

- Start date

Don't despair! Your new AIverlords will doubtless find something for you to do in the new laborless future.Triple pfffftttt !

Remember how in "Star Trek: TNG" Picard was forever yammering on about how people didn't work to accumulate wealth anymore, but to "improve themselves?" Just imagine how improved people will be when every conceivable form of occupation can be done infinitely faster by machines. When the world is so flooded by tsunamis of product that no human could ever even *dream* of being noticed. When the world will be full of wealth, but almost nobody will have any possible way of getting any of it.

Yeah. It'll be a hoot.

I see. So, I will avoid this since human creativity is far superior to mere production output. Flooded with product, I will still buy the things I always buy. Besides, neither I nor the rest of the world can afford a massive pile of soon-to-be-garbage...

Would you pair please cut out your constant bickering. It is tiresome.

The impact of AI and automation on business

This month I was fortunate to join an elite group of Australia’s most successful AI and automation ‘rock stars’, brought together by the team at 6 Degrees media , to share critical insights...

www.suekeay.com

Kat Tsun

I really should change my personal text

- Joined

- 16 June 2013

- Messages

- 1,126

- Reaction score

- 1,259

The report I read was that the AI was rejecting *better* qualified minority candidates in favor of non-minorities.

Then the criteria should be able to be determined in the coding, yes?

What coding? A large neural network codes itself. It's finding patterns in data and generating outputs for matching these patterns. Sometimes it finds very weird patterns that people can't manage with simple controls. This appears to be one of those times. Maybe.

The report I read was that the AI was rejecting *better* qualified minority candidates in favor of non-minorities.

Then the criteria should be able to be determined in the coding, yes?

What coding? A large neural network codes itself. It's finding patterns in data and generating outputs for matching these patterns.

It cannot do anything outside of its programming. This is obviously an outgrowth of military pattern recognition technology for text and images. Outputs are outputs.

Forest Green

ACCESS: Above Top Secret

- Joined

- 11 June 2019

- Messages

- 5,102

- Reaction score

- 6,698

Who programs the computer though?I saw the same arguments in school re: calculus. "You'll need to be able to do this to be an engineer!" Then I got out into the world and found that *nobody* used calculus. That's what computers were for.

Kat Tsun

I really should change my personal text

- Joined

- 16 June 2013

- Messages

- 1,126

- Reaction score

- 1,259

The report I read was that the AI was rejecting *better* qualified minority candidates in favor of non-minorities.

Then the criteria should be able to be determined in the coding, yes?

What coding? A large neural network codes itself. It's finding patterns in data and generating outputs for matching these patterns.

It cannot do anything outside of its programming.

There is no "programming" beyond machine learning algorithms. You give it data which has been labeled by manual sorting, assign weights, and a series of machine learning algorithms does a bunch of math with incredibly powerful parallel processors which proceeds to find patterns, and then outputs a result. There's no actual method by which people can predict what results it will create. That's the main issue at hand!

It results in odd things, sometimes things that require months of reverse engineering to figure out how an algorithm produced that result, and we're entering into the third AI winter so those necessary months aren't going to be paid much.

This is obviously an outgrowth of military pattern recognition technology for text and images.

No, it's not. The algorithms used in modern ML AI originated in the 1980's with LISP machines. Computing power wasn't up to snuff in the mid-1980's to use these new algorithms and it took a few decades for that to happen. I've literally mentioned this three times now.

"Military pattern recognition technology" is used in Photoshop for edge-detection with magic wand. The mathematics in machine learning are a bit more involved than simple edge detection, or contrast matching, which are things that ML can use but are not innate to it.

Population modeling of wild geese have more in common with ML, tbh.

The report I read was that the AI was rejecting *better* qualified minority candidates in favor of non-minorities.

Then the criteria should be able to be determined in the coding, yes?

What coding? A large neural network codes itself. It's finding patterns in data and generating outputs for matching these patterns.

It cannot do anything outside of its programming.

There is no "programming" beyond machine learning algorithms. You give it data which has been labeled by manual sorting, a series of machine learning algorithms does a bunch of math with incredibly powerful parallel processors, proceeds to find patterns, and then outputs a result. There's no actual method by which people can predict what results it will create. That's the main issue at hand!

This is obviously an outgrowth of military pattern recognition technology for text and images.

No, it's not. "Military pattern recognition technology" is used in Photoshop for edge-detection with magic wand. The mathematics in machine learning are a bit more involved than simple edge detection, or contrast matching, which are things that ML can use, but are not innate to it.

"proceeds to find patterns" How does it "know" what to look for? The outputs had better be useful consistently, or it stops being used. When I refer to the military, I'm talking about hundreds of photo interpreters in rooms trying to locate a camouflaged vehicle in dense foliage. How much easier would it be if a machine had visual data it could use to locate a certain camouflage pattern under certain lighting conditions?

Kat Tsun

I really should change my personal text

- Joined

- 16 June 2013

- Messages

- 1,126

- Reaction score

- 1,259

"proceeds to find patterns" How does it "know" what to look for?

By looking at the past.

Provided it has fairly accurate information labeling in the dataset, an ML LLM can predictably tell us Newton's laws and that these won't change in the future, because they are laws, not theories.

In other fields, consider that population modeling of wild animals often uses historical information of die-offs and climate modeling to determine the population of brine shrimp or geese. Then, supposing if the temperature of a swampy region rises by 0.3 C or the level of a briny lake increases by 8% over the next 10 years, we can know how much these animals will be affected by these events because they happened in similar amounts before.

It's not literally true, perhaps there's some sort of switchover at 2 to 3 C average water temperature that can be exacerbated by a heat wave, which causes the brine shrimp to shed their shells and dissolve, but it's the best we have to guide decision makers.

No one can predict the future perfectly accurately, but some models are more accurate than others, and most importantly, some tests are stringent than others, which leads us to...

The outputs had better be useful consistently, or it stops being used.

...how "useful" is a internal memo or soulless corporate speak to a CEO? Probably not much. How "accurate" does language need to be? Can you restate Newton's laws in a general sense, or do they need to be spoken perfectly verbatim, lest they cease to apply to you? Language is a bit finicky in that it can be as broad or as narrow as you want, and ML algorithms have to choose between narrower application and higher accuracy or wider application and lower accuracy.

LLM can accurately replicate the writing ability of a typical corporate intern, with some useful information, and some information that is probably wrong.

This isn't "bad" because LLM isn't trying to be anything else, at least from the perspective of the actual engineers, although the marketing team behind whichever flavor of LLM is in the news this week is no doubt touting it big. Language is far less stringent than the natural world of swamps and brine shrimp, but it's probably more important in a decisionmaking, because it determines the sort of decisions that powerful people will make.

I don't think LLM is coming for actual knowledge domain specialists like PhD engineers or anything. Its developers are scanning thousands of questionably acquired textbooks for bachelor's engineers, labeling them (probably poorly), and it's giving you sometimes incorrect blurbs about them, if not outright inventing things. This is probably an intractable issue of the nature of pattern matching and the mathematical laws that govern it.

A more accurate LLM would be very terse and verbatim. A less accurate one is flowery and expository. These have different use cases and I could see the latter being used to produce written pornography/"romance" novels and the former being used for actual memos and legal memo writing for C&Ds.

In a world where people literally get into fights in courts over getting 0.0002 seconds less latency due to slightly closer proximity to a stock exchange, I don't think it's entirely out of the question that shaving a cumulative 30-45 minutes off a lawyer's work week filling out fields in a C&D would be seen as an economical decision. People are already trying this out.

The worst part about LLM is that they might end up bulldozing a lot of copyright law by applying inadequate penalties for IP theft. Pay $15 million in damages to three publishers because you stole their books yeah okay buddy no problem and then Art Twitter tries to sue and everyone gets $5.78 in 12 years after the legal fees are subtracted.

The second worst part about LLM is that their fan club attracts some of the people who would most likely be put out of work by them. There's a truly bizarre clade of "tech bro" who seems to want to believe technology is the best thing ever, despite decades of being dunked on by it, and anyone who actually works in these fields (AI, programming, computer, IT, etc.) tends to hate technology. I recently had to change the garage door opener in my home and instead of a little remote that you push a button on to open it, it has to be connected to a cell phone which needs an internet connection, and if the garage door opener doesn't have a live wi-fi signal it can't receive the open signal from the phone that's 10 feet away from it. Very strange design choice, really.

Apparently someone is busy buying these Wi-Fi enabled "smart" garage door openers and they are probably the same people who think LLMs are cool and ultra goodly for society, though.

Suffice to say, LLMs are probably going to stick around more than LISP machines did. A lot of the 1980's research into AI went into stuff like Lycos and Google and AltaVista for pattern matching of search terms in WWW databases in the mid-1990's, so maybe they'll find a use replacing phone menus or something. Unfortunate because I guess it means that people who pioneered one of the biggest thefts of IP in human history will just sort of get away with it.

Last edited:

A reply lacking any real world examples.

valawyersweekly.com

valawyersweekly.com

The promise and peril of artificial intelligence in patent law | Virginia Lawyers Weekly

As in virtually all walks of life these days, artificial intelligence is making its way through the legal industry — patent law included. In some corners of the profession, the advent of AI is being greeted with enthusiasm and optimism, and in others, skepticism and pessimism. What’s undeniable...

And not only get away with it, but they are working hard on lobbying 'AI safety' regulations so nobody else can repeat it after them.Unfortunate because I guess it means that people who pioneered one of the biggest thefts of IP in human history will just sort of get away with it.

And not only get away with it, but they are working hard on lobbying 'AI safety' regulations so nobody else can repeat it after them.Unfortunate because I guess it means that people who pioneered one of the biggest thefts of IP in human history will just sort of get away with it.

It is rare for a company to go to the government so it, not the company, controls/regulates it. How much electricity and how much equipment is required to imitate this is stated as unknown. Sure. That's how Wall Street works. Everything is secret, except to investors. So Microsoft is putting billions into this but doesn't know anything about where it's going? Give me a break.

Kat Tsun

I really should change my personal text

- Joined

- 16 June 2013

- Messages

- 1,126

- Reaction score

- 1,259

And not only get away with it, but they are working hard on lobbying 'AI safety' regulations so nobody else can repeat it after them.Unfortunate because I guess it means that people who pioneered one of the biggest thefts of IP in human history will just sort of get away with it.

More or less. I suppose while the 1980's nostalgia for being able to "aid/replace doctors with LISP machines" hasn't worn off, the more practical issue is that people in positions of journalism (or right now, just paralegals) will be written out of the field.

At the very least that means that corporations will have even more power over the flow of information when they can hold jobs over the heads of writers and editors more than they do now. It's one thing to say we can replace you but they actually will be able to replace people with robots in the future and just claim "AI protection" instead of fraud when it produces a bad story article, making them immune to both legal repercussion and moral ones.

The real danger is therein that AI isn't being squashed immediately by fiat, or simple arrests, but being mired in "law" and "regulation" which ensures its continued survival in its current owners' hands. It wouldn't be anywhere near as bad if ML models were simply made public infrastructure or something, but that applies to the Internet at large really, since there are very few viable business models in traditional profit schemes for Web-based business.

Aside from renting physical server infrastructure for people's data and computational needs, there's precious little money to be made virtually for the most part, and most web stuff on the Internet we take for granted operates on donations and actively bleeds money, like Twitter, Facebook, Amazon's entire web business, and Reddit, to name a few.

Cross your fingers there's a big breach of OAI data security that leaks LLMs onto the clearnet like happened with image/photo GANs. At least that will level the playing field for disinformation and propagandists. It will also be cool to run local copies of LLMs in server farms, and that would help clear up the rent seekers a tad too.

Last edited:

- Joined

- 3 June 2011

- Messages

- 17,332

- Reaction score

- 9,070

- Joined

- 3 June 2011

- Messages

- 17,332

- Reaction score

- 9,070

I feel like this is one of those fake advocacy organisations funded by the interests they are supposed to fight against, a time-tested method pioneered by Big Oil and Big Tobacco.

This seems like a well-constructed scaremongering video, probably they are building up for the big push to manufacture consent to push whatever they want through legislators.

Lol @ Big Tech being concerned about 'privacy' and 'abuse' concerns. Aren't they the ones who sell you smartphones and home assistants that log and capture every conversation you have, who store every second of video coming from your smart doorbell since the second it was installed?

And that's just what they have done so far, without big scary AI. And now they are concerned what the plebes might do with AI.

Edit: After getting halfway through the video, it seems the point they are trying to make is they make the admission that current Social Media companies have ruined society, and to prevent AI doing the same is that we should restrict AI usage to the same set of companies, and give them a bunch of rules to make them promise they'll be good.

This seems like a well-constructed scaremongering video, probably they are building up for the big push to manufacture consent to push whatever they want through legislators.

Lol @ Big Tech being concerned about 'privacy' and 'abuse' concerns. Aren't they the ones who sell you smartphones and home assistants that log and capture every conversation you have, who store every second of video coming from your smart doorbell since the second it was installed?

And that's just what they have done so far, without big scary AI. And now they are concerned what the plebes might do with AI.

Edit: After getting halfway through the video, it seems the point they are trying to make is they make the admission that current Social Media companies have ruined society, and to prevent AI doing the same is that we should restrict AI usage to the same set of companies, and give them a bunch of rules to make them promise they'll be good.

Last edited:

Home - Data + AI Summit 2023 | Databricks

The premier event for the global data, analytics and AI community returns to San Francisco June 26-29.

klem

I really should change my personal text

- Joined

- 7 March 2015

- Messages

- 618

- Reaction score

- 1,247

Artificial intelligence and its challenges for the defense (L’intelligence artificielle et ses enjeux pour la Défense). Theme of the "Revue Défense Nationale" 2019/5 (N° 820).( https://www.defnat.com/sommaires/sommaire.php?cidrevue=820 )-( https://www.cairn.info/revue-defense-nationale-2019-5.htm )

Attachments

A useful development IMHO:

www.heritagedaily.com

www.heritagedaily.com

Would be interesting to see what is learnt as a result.

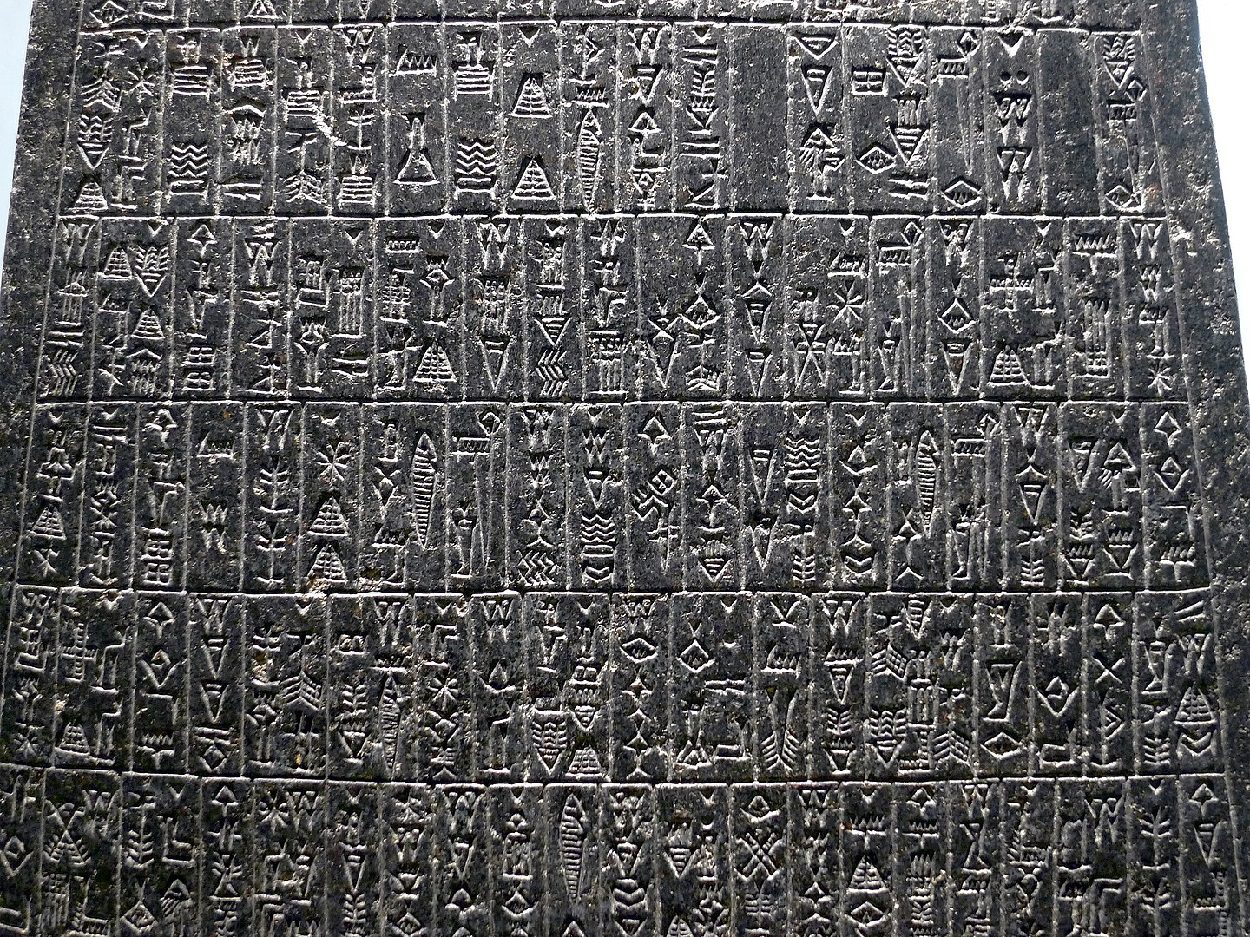

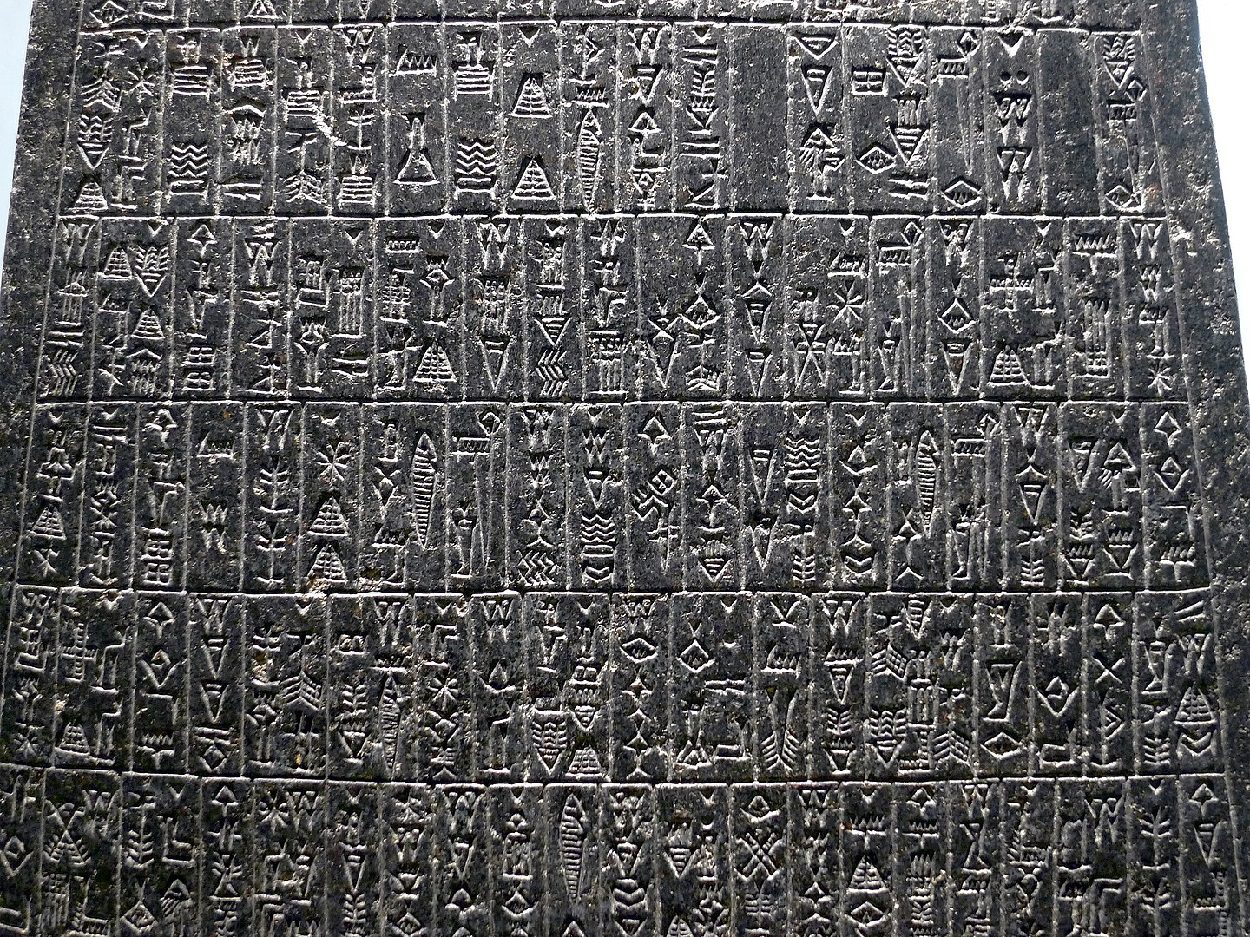

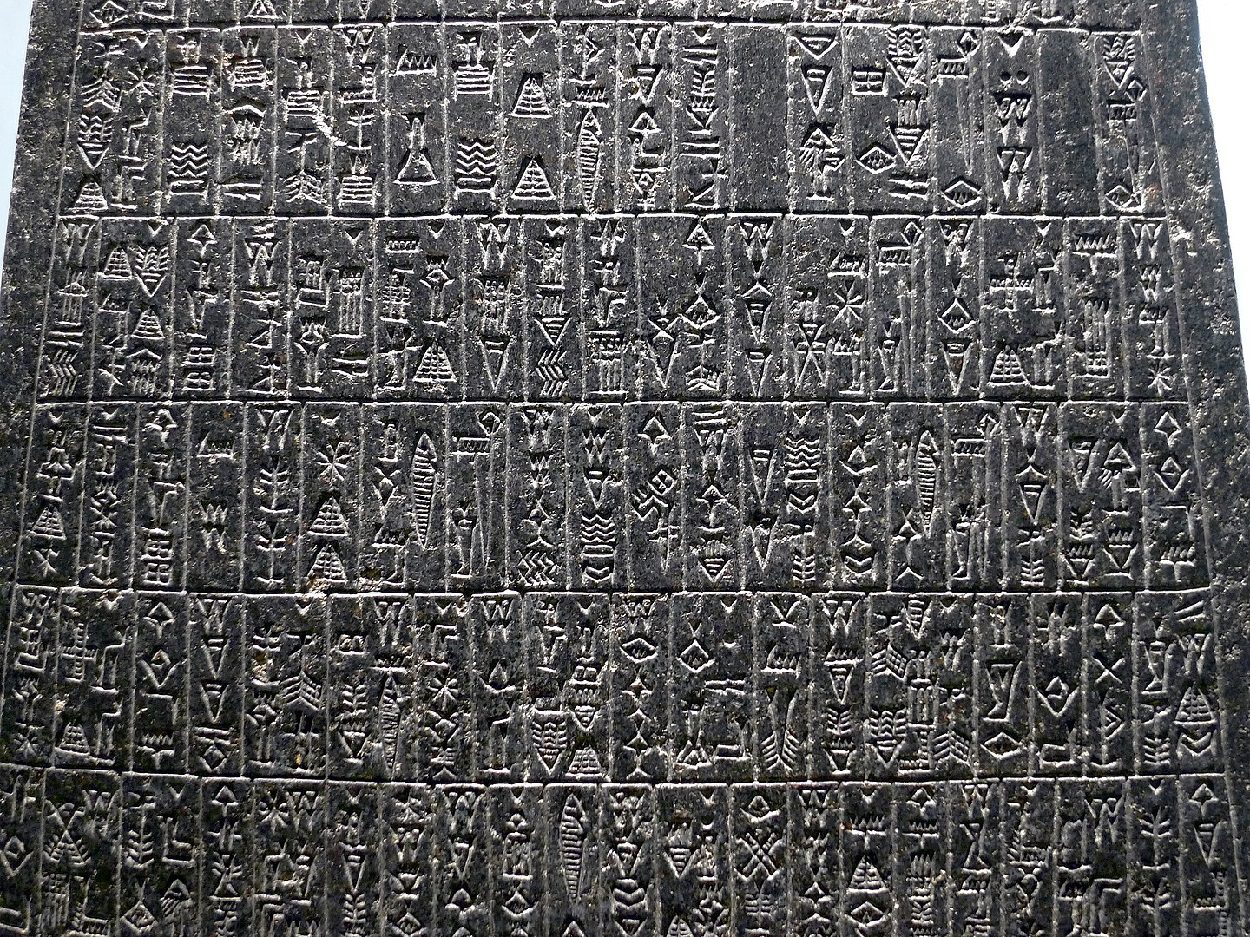

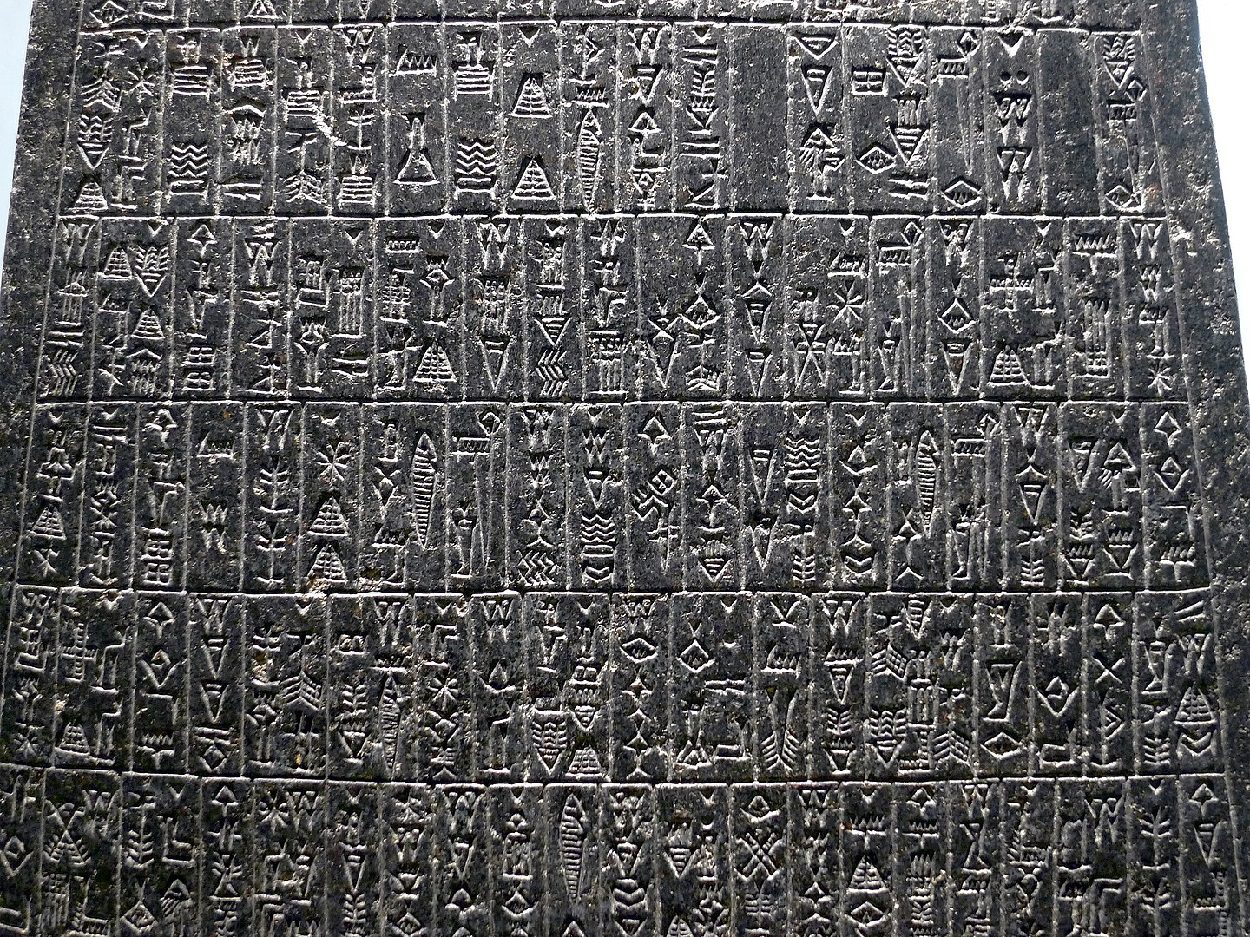

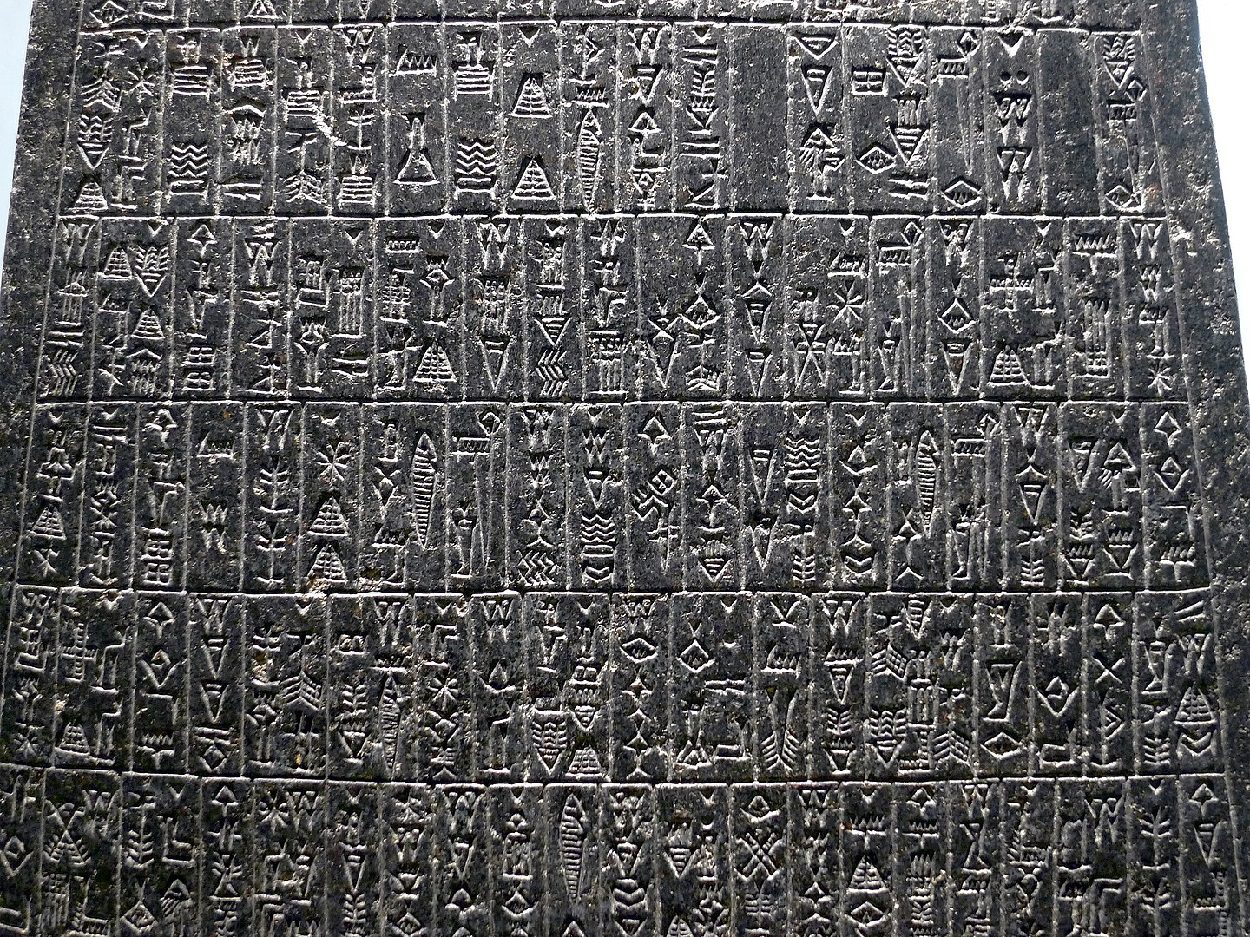

Archaeologists use artificial intelligence (AI) to translate 5,000-year-old cuneiform tablets

A team of archaeologists and computer scientists have created an AI program that can translate ancient cuneiform tablets instantly using neural machine learning translations. - HeritageDaily - Archaeology News

www.heritagedaily.com

www.heritagedaily.com

Would be interesting to see what is learnt as a result.

A useful development IMHO:

Archaeologists use artificial intelligence (AI) to translate 5,000-year-old cuneiform tablets

A team of archaeologists and computer scientists have created an AI program that can translate ancient cuneiform tablets instantly using neural machine learning translations. - HeritageDaily - Archaeology Newswww.heritagedaily.com

Would be interesting to see what is learnt as a result.

Not too much, for the most part these tablets contain accounting records, although perhaps some contain instructions for building Noah's ark.

If you believe that sort of thing.

, although perhaps some contain instructions for building Noah's ark.

- Joined

- 25 July 2007

- Messages

- 3,873

- Reaction score

- 3,181

A useful development IMHO:

Archaeologists use artificial intelligence (AI) to translate 5,000-year-old cuneiform tablets

A team of archaeologists and computer scientists have created an AI program that can translate ancient cuneiform tablets instantly using neural machine learning translations. - HeritageDaily - Archaeology Newswww.heritagedaily.com

Would be interesting to see what is learnt as a result.

Not too much, for the most part these tablets contain accounting records ...

Those "accounting records" are pure gold for Assyriologists and other Ancient Near East studies. Most ANE trade routes have been uncovered due to the translating of such 'mundane' record-keeping. (And, yes, that is important for understanding the origins of human civilization.)

Remember, for example, that archeology is a study where invaluable details are found in palimpsest manuscripts - what we'd now call notes scribbled on recycled scrap paper. And archeologists spend much of their time excavating middens. You may not want to dig in refuse heaps but I, for one, am grateful that archeologists do.

Sure, the cataloguing of receipts for the ancient textile and tin trade to Anatolia is not for everyone. Then again, none of my neighbours would agree that obsessing over old airplanes is a good use of one's time either ...

AI News - Artificial Intelligence News

Artificial Intelligence News provides the latest AI news and trends. Explore industry research and reports from the frontline of AI technology news.

What is interesting for experts is not usually interesting for amateurs.A useful development IMHO:

Archaeologists use artificial intelligence (AI) to translate 5,000-year-old cuneiform tablets

A team of archaeologists and computer scientists have created an AI program that can translate ancient cuneiform tablets instantly using neural machine learning translations. - HeritageDaily - Archaeology Newswww.heritagedaily.com

Would be interesting to see what is learnt as a result.

Not too much, for the most part these tablets contain accounting records ...

Those "accounting records" are pure gold for Assyriologists and other Ancient Near East studies. Most ANE trade routes have been uncovered due to the translating of such 'mundane' record-keeping. (And, yes, that is important for understanding the origins of human civilization.)

Remember, for example, that archeology is a study where invaluable details are found in palimpsest manuscripts - what we'd now call notes scribbled on recycled scrap paper. And archeologists spend much of their time excavating middens. You may not want to dig in refuse heaps but I, for one, am grateful that archeologists do.

Sure, the cataloguing of receipts for the ancient textile and tin trade to Anatolia is not for everyone. Then again, none of my neighbours would agree that obsessing over old airplanes is a good use of one's time either ...

Attachments

Kat Tsun

I really should change my personal text

- Joined

- 16 June 2013

- Messages

- 1,126

- Reaction score

- 1,259

A lawyer used ChatGPT to prepare a court filing. It went horribly awry.

As part of an airline passenger's lawsuit, the AI invented relevant cases that didn't exist and insisted they were real.

lol

martinbayer

ACCESS: Top Secret

- Joined

- 6 January 2009

- Messages

- 2,374

- Reaction score

- 2,100

It sure looks like Weinberg’s Law of "If builders built buildings the way programmers wrote programs, then the first woodpecker that came along would destroy civilization" still holds true in this day and age...

I don't think this is unique to programmers.It sure looks like Weinberg’s Law of "If builders built buildings the way programmers wrote programs, then the first woodpecker that came along would destroy civilization" still holds true in this day and age...

The production of every piece of military equipment tends to be a balancing act between 'how many years of delays are we allowed to accept' and 'how broken is the final product is allowed to be'

TBF it's ChatGPT doing a great job as a predictive text engine: it fooled this lawyer with its fake references and fake cases. WAD.

It didn't fool anyone except this particular fool. Hmm, perhaps his thinking went, I'll try this umproven, already shown to be error-prone thing and save some time. Stupid is as stupid does. Sad.

I don't think this is unique to programmers.It sure looks like Weinberg’s Law of "If builders built buildings the way programmers wrote programs, then the first woodpecker that came along would destroy civilization" still holds true in this day and age...

The production of every piece of military equipment tends to be a balancing act between 'how many years of delays are we allowed to accept' and 'how broken is the final product is allowed to be'

Excuse me? When developing new equipment, especially for emergencies like World War II, the engineers need to test whatever it is rigorously. In final production, a certain level of quality control is required. I knew a man who did such work regarding U.S. tanks.

OpenAI Hit With Class Action Over ‘Unprecedented’ Web Scraping

The generative artificial intelligence company OpenAI LP was hit with a wide-ranging consumer class action lawsuit alleging that company’s use of web scraping to train its artificial intelligence models misappropriates personal data on “an unprecedented scale.”

jeffb

ACCESS: Top Secret

- Joined

- 7 October 2012

- Messages

- 1,227

- Reaction score

- 1,722

martinbayer

ACCESS: Top Secret

- Joined

- 6 January 2009

- Messages

- 2,374

- Reaction score

- 2,100

As a German diploma (yes, we are a dying breed...) degreed aerospace engineer working in US corporate liability risk reduction (engineering degrees beat law degrees every time, since violating natural laws is much harder than violating legal onesTBF it's ChatGPT doing a great job as a predictive text engine: it fooled this lawyer with its fake references and fake cases. WAD.

Last edited:

- Joined

- 6 November 2010

- Messages

- 4,228

- Reaction score

- 3,167

I may be guilty of slandering an entire profession here, but I recall reading somewhere - unfortunately I can't remember where - that of all university students, law students were the hardest to convince of the statisticians' position in the three doors problem.

martinbayer

ACCESS: Top Secret

- Joined

- 6 January 2009

- Messages

- 2,374

- Reaction score

- 2,100

I'd like to put Shakespeare's quote from https://en.wikipedia.org/wiki/Let's_kill_all_the_lawyers on the record, at the risk of straying too far off topic...

Last edited:

Similar threads

-

-

AN ASSESSMENT OF REMOTELY OPERATED VEHICLES TO SUPPORT THE AEAS PROGRAM...

- Started by Grey Havoc

- Replies: 0

-

Davos Doom-mongers herald a new dark age for climate science

- Started by Grey Havoc

- Replies: 10

-

Russian crackdown on the dissemination of space information

- Started by greenmartian2017

- Replies: 8

-