Kat Tsun

eeeeeeeeeeeeeee

- Joined: 16 June 2013
- Messages: 1,371
- Reaction score: 1,778

Not even remotely the same. The correct analogy: someone takes all of my, say, US Fighter Projects, redraws the diagrams (perhaps as 3D full color renderings), re-writes the text in their own words and publishes the result under their own name.

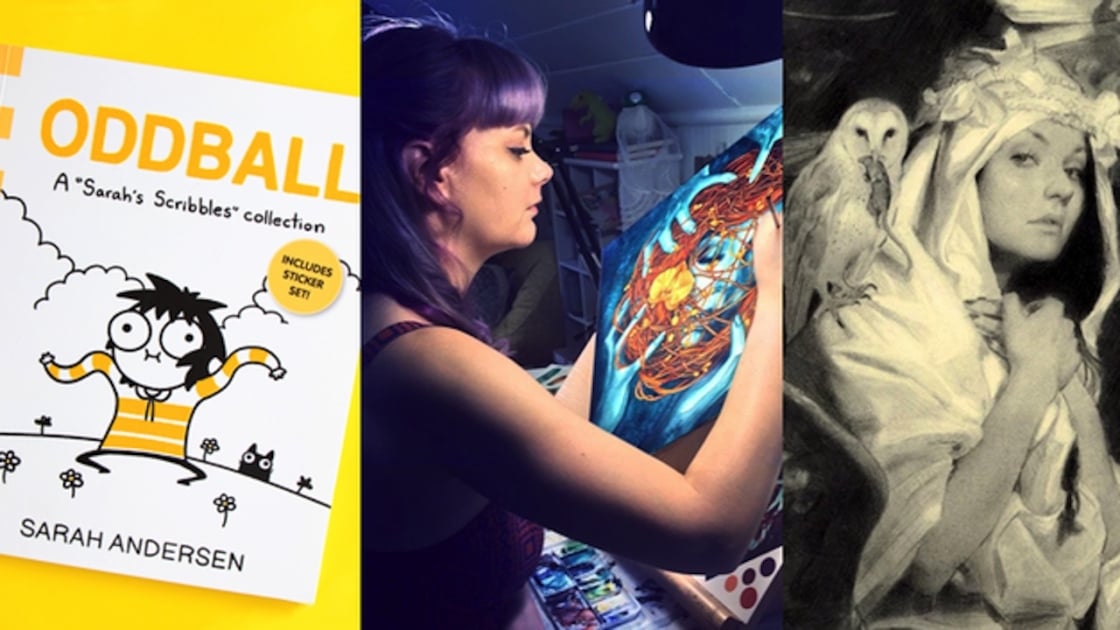

Not in the slightest. No analogy is even needed here, because we know what this is: it's taking someone's work, without remuneration or consent, putting it into a database, and running it through a training algorithm to regurgitate it in mangled form, in order to sell it to people using mobile apps. It's plagiarism at best and intellectual property theft at worst, and one of these is grounds for a civil suit. Which one applies depends on the exact firm, its algorithm and database, how both are maintained and added to, and how the firm deals with authors in particular.

I know of a couple of firms that explicitly use open source and public domain works to sidestep this problem, but you get similar issues with image-generating GANs that scrape sites like pixiv (Japan's version of DeviantArt) or Twitter, again without permission.

A film studio or video game publisher has to pay royalties, or acquire a license or some other form of legal permission, to adapt someone's book into a script. AI should be no different when it comes to putting someone's work into a database. Someone should be able to sign a legally binding contract and be informed that their work is being included in a database, not have it silently stolen from the Internet by a web spider.

The most ethical solution is to tie everything up in a mass class action lawsuit, in order to establish proper boundaries: AI firms pay authors and publishers to use their works, if the authors and publishers want that, and provide proper remuneration for any reuse.

If AI is in any way profitable, it will be able to cover this, just like film studios or games publishers do when they make things.

The disparity is that the AI trained on the texts is not there to, nor will it, redistribute the works. It has simply absorbed the knowledge within in ways that mimic our own memory.

No one in the lawsuit cares. The important part is that someone, whether a human being or the corporate legal person that owns an AI web spider, took that work without permission, without remuneration, and used it to generate a profit. That's all that matters. How the profit is generated is immaterial. Unless AI doesn't generate a profit, of course, in which case why are we bothering with it?

Large language models don't "learn" either, as they're statistical models. A better analogy is that someone put a work through a paper shredder, reassembled some legible text from the shreds, reprinted the mess, and claimed it was an original work.

That's not how copyright works, unfortunately. That's not how it should work, either. If you want to debate that, you can start publishing your own works and patents under a copyleft license or something, make no money from them, and then perhaps contribute them to your favorite AI databases. That would at least be pretty honest, if foolish.
