Ethics consists in not allowing the great crimes against humanity such as the Inquisition, the Holocaust or the population adjustments effected by communism to be forgotten.

Vatican-approved ethics guide: Keep humanity focus of new tech

“If we build the future badly, we will live in a terrible world,” the handbook warns Big Tech.

www.catholicnewsagency.com

The potential effect of Artificial Intelligence on civilisation - a serious discussion

- Thread starter GTX

> But could we still remain friends?

A more accurate analogy would be like marriage: intelligence, patience, adaptability... basically the end of freedom.

- Joined

- 19 July 2016

- Messages

- 3,728

- Reaction score

- 2,694

A "Vatican-approved ethics guide"? What does that contain? No LGBTQ? Protect church-approved child molestation? Protect church-approved theft of children? Deny women the right to abortion because the church does not like it? What on earth makes the church believe it is so moral, so ethical and so damn right that it can interfere in the lives of non-believers?

Little wonder Putin promotes his Christianity.

In today's world there are women, atheists, Jews and homosexuals willing to risk their freedom and even their lives to allow a Catholic or a Muslim to freely practice their religion because that is the value system that has proven to work best.

No one wants to live in a world with an infant mortality of fifty percent and a life expectancy of 37 years; a world in which sea bathing, anesthesia, contraceptives, potatoes, tomatoes and cats are forbidden for religious reasons; where justice admits as evidence a confession, extracted under torture, to an accusation of witchcraft based on a spot on the skin; where people are born and die for generations within a few meters of the city walls; where paedophilia, racism and slavery are tolerated; where doctors and scientists are burned alive; where population adjustments consist of organizing a children's crusade.

That world existed and dominated most of the known world for a thousand years. Part of it still exists, playing with its swords, its dungeons and its dragons, in reality and in consciousness, waiting for a comet, a virus or a dictator to restore its power.

And from time to time they provoke a little to see if the opposition weakens.

Folks, a warning to stay on topic.

- Joined

- 19 July 2016

- Messages

- 3,728

- Reaction score

- 2,694

Agreed, but there will be a need for something like the Three Laws of Robotics in the AI mix, and asking "Who is going to be most influential in this process?" is important. We just have to know that certain institutions will push for involvement, if not to lead.

When the police chief realizes that he cannot stop the anger of the peasants, he places himself at the head of the demonstration. Cui prodest?

- Joined

- 16 December 2010

- Messages

- 2,841

- Reaction score

- 2,084

What seems to be emerging is that 'human curation', for want of a better term, is needed at this stage.

In the linked article, Microsoft blames 'human error' for an AI-generated 'listicle' recommending the Ottawa Food Bank as a place for tourists to visit. I seriously doubt any human read the list.

www.theverge.com

Microsoft says listing the Ottawa Food Bank as a tourist destination wasn’t the result of ‘unsupervised AI’

“We have identified that the issue was due to human error.”

Gaafar

ACCESS: Confidential

- Joined

- 11 August 2021

- Messages

- 70

- Reaction score

- 70

> To believe AI is merely something "written by humans" is missing the point of AI. At some stage AI will be writing the code for successive generations of AI; then there will be a change, and there is no way to know for certain which way TRUE AI will go, as opposed to AI coded by humans. Until AI code is written by AI, it cannot by definition be true AI.

AI was created by humans for humans, and personally, I think humans won't let AI replace them to the point of AI writing code for AI. I think humans (especially the press) will create policies for AI the way they did with nuclear weapons back then.

- Joined

- 19 July 2016

- Messages

- 3,728

- Reaction score

- 2,694

The term AI is in fact disingenuous. Something that works from a series of algorithms written by biological entities cannot, by definition, be artificial.

Gaafar

ACCESS: Confidential

- Joined

- 11 August 2021

- Messages

- 70

- Reaction score

- 70

Either way, my point was that I don't think AI will reach the point of writing its own algorithms.

- Joined

- 19 July 2016

- Messages

- 3,728

- Reaction score

- 2,694

Whatever moniker it wears, fear of Skynet will curb actual AI, apart from hypotheticals and whimsy. AI is just marketing speak for "It's stupid and costs too much", so give us your money, sheeple.

Recent articles

https://www.channelnewsasia.com/world/fight-over-dangerous-ideology-shaping-ai-debate-3727481

AI
How do we decide what to look at next? A computational model has the answer

Imagine you are looking out the window: a small bird is flying across the blue sky, and a girl with a red baseball cap is walking along the sidewalk, passing by two people sitting on a bench. You might think that you are "just seeing" what is happening, but the truth is that to make sense of the...

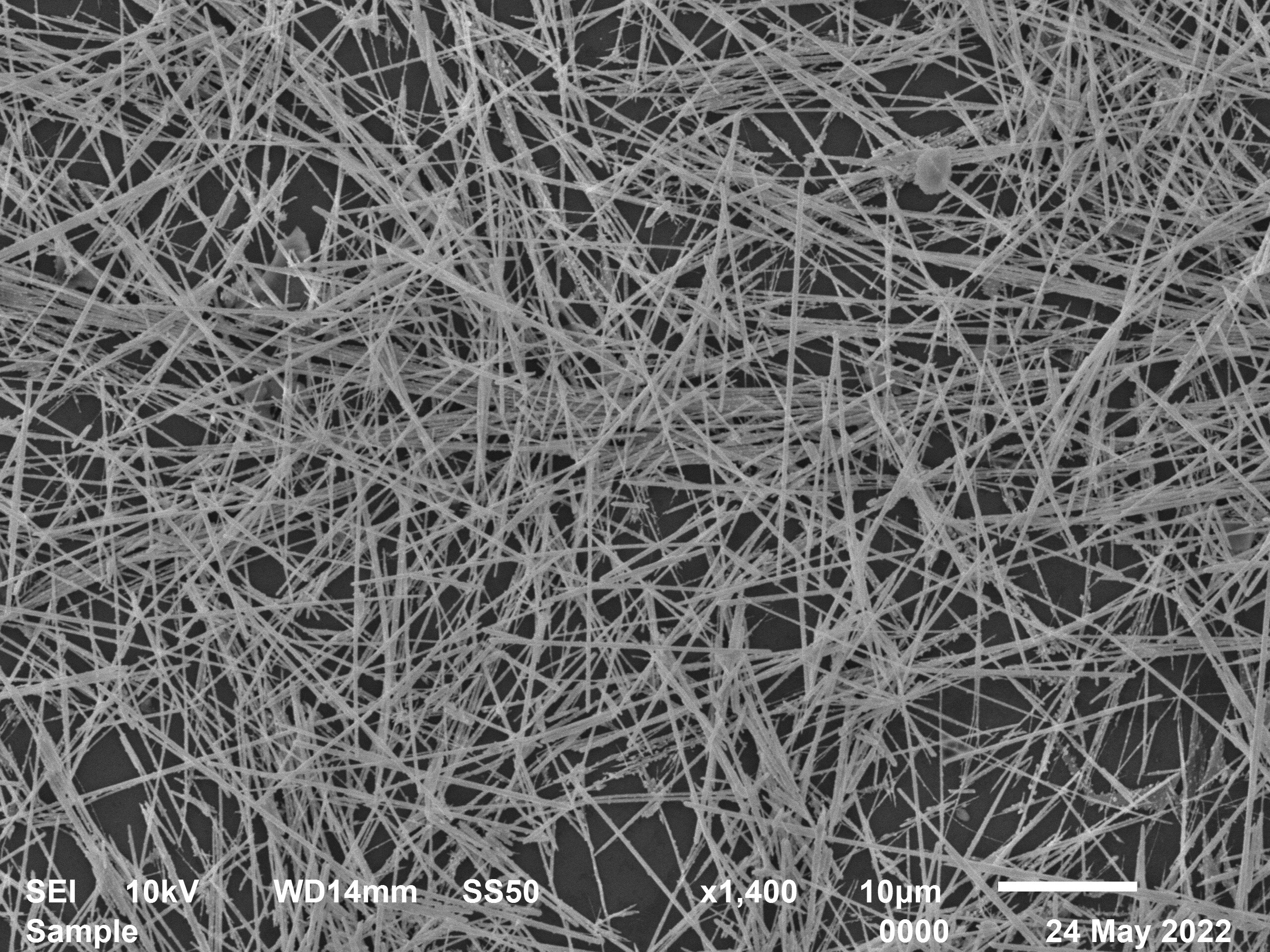

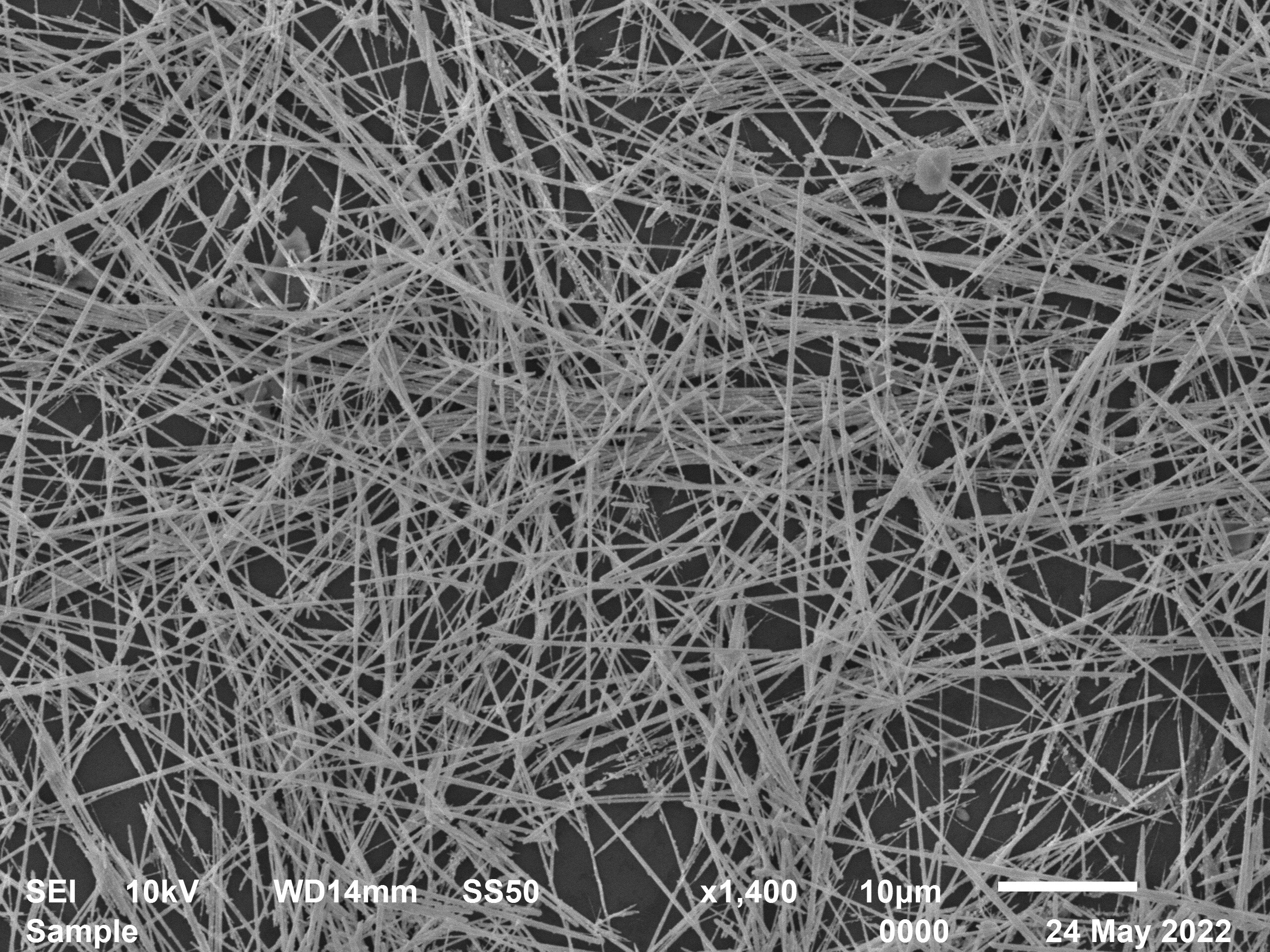

Nanowire 'brain' network learns and remembers 'on the fly'

For the first time, a physical neural network has successfully been shown to learn and remember "on the fly," in a way inspired by and similar to how the brain's neurons work.

Researchers use AI to make mobile networks more efficient

A new artificial intelligence (AI) model, developed by the University of Surrey, could help the UK's telecommunications network save up to 76% in network resources compared to the market's most robust Open Radio Access Network (O-RAN) system—and improve the environmental sustainability of mobile...

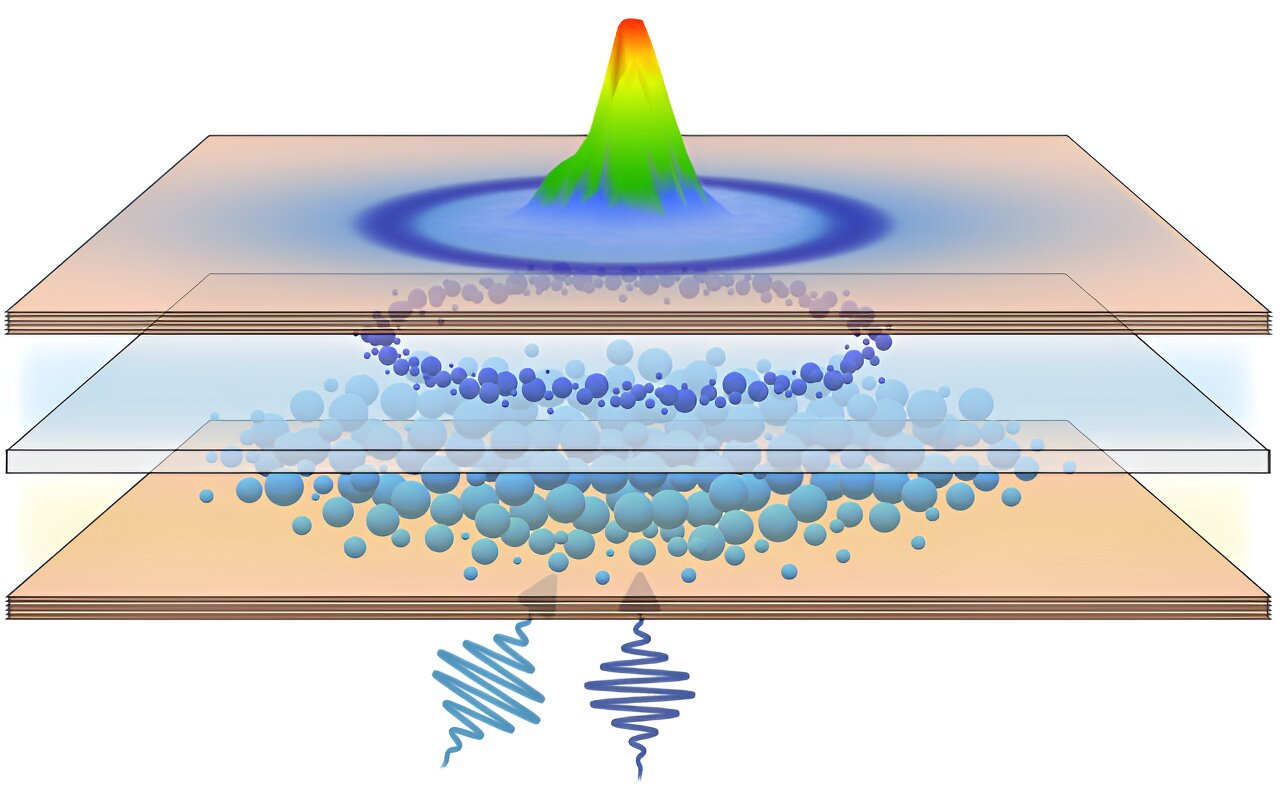

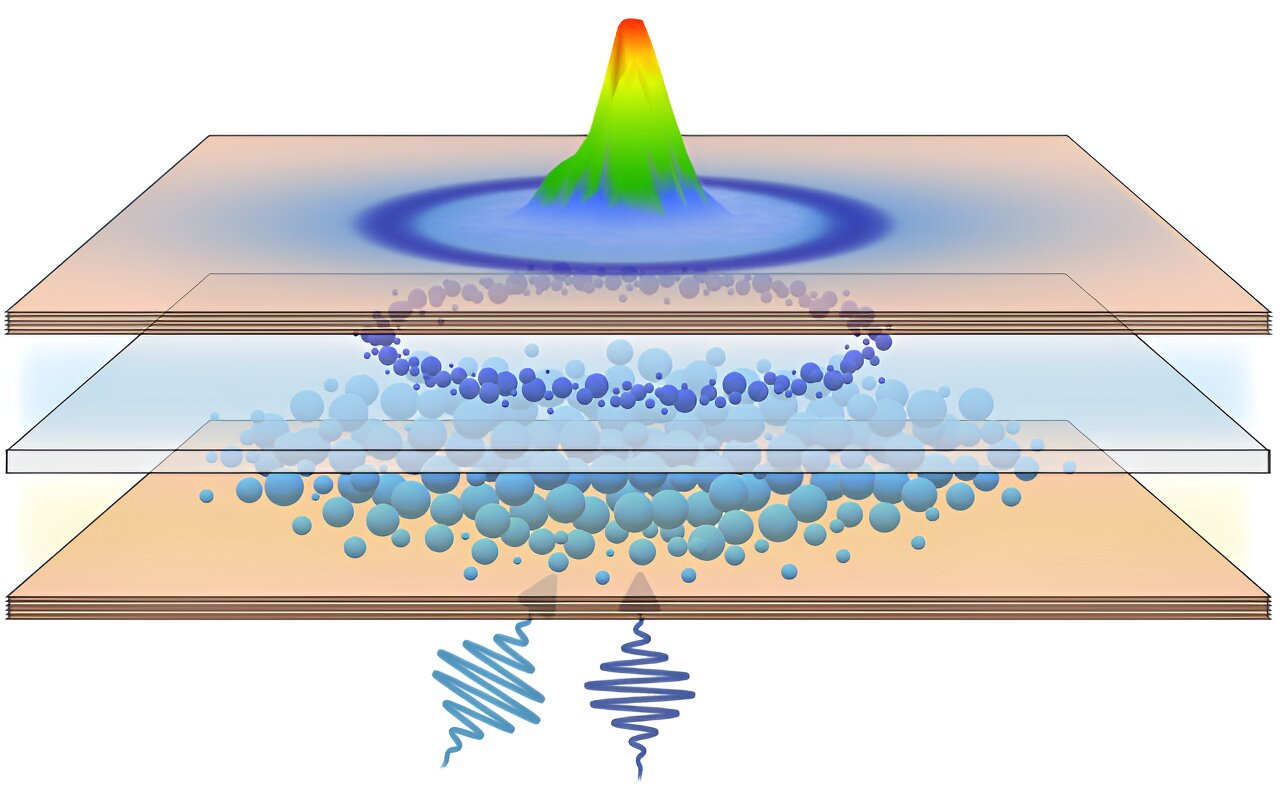

Scientists manipulate quantum fluids of light, bringing us closer to next-generation unconventional computing

In a quantum leap toward the future of unconventional computing technologies, a team of physicists made an advancement in spatial manipulation and energy control of room-temperature quantum fluids of light, aka polariton condensates, marking a pivotal milestone for the development of high-speed...

Learning to forget—a weapon in the arsenal against harmful AI

With the AI summit well underway, researchers are keen to raise the very real problem associated with the technology—teaching it how to forget.

The future of AI hardware: Scientists unveil all-analog photoelectronic chip

Researchers from Tsinghua University, China, have developed an all-analog photoelectronic chip that combines optical and electronic computing to achieve ultrafast and highly energy-efficient computer vision processing, surpassing digital processors.

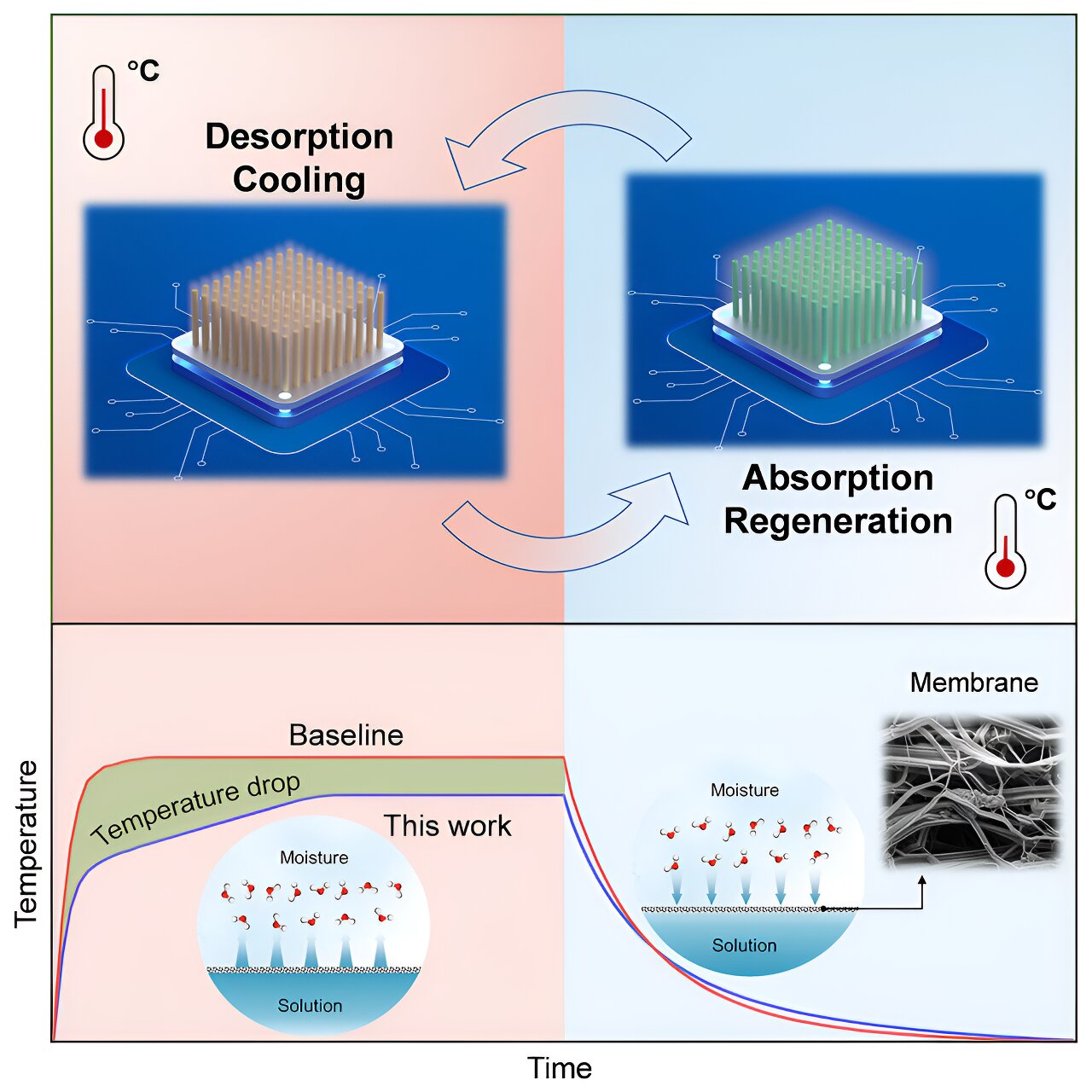

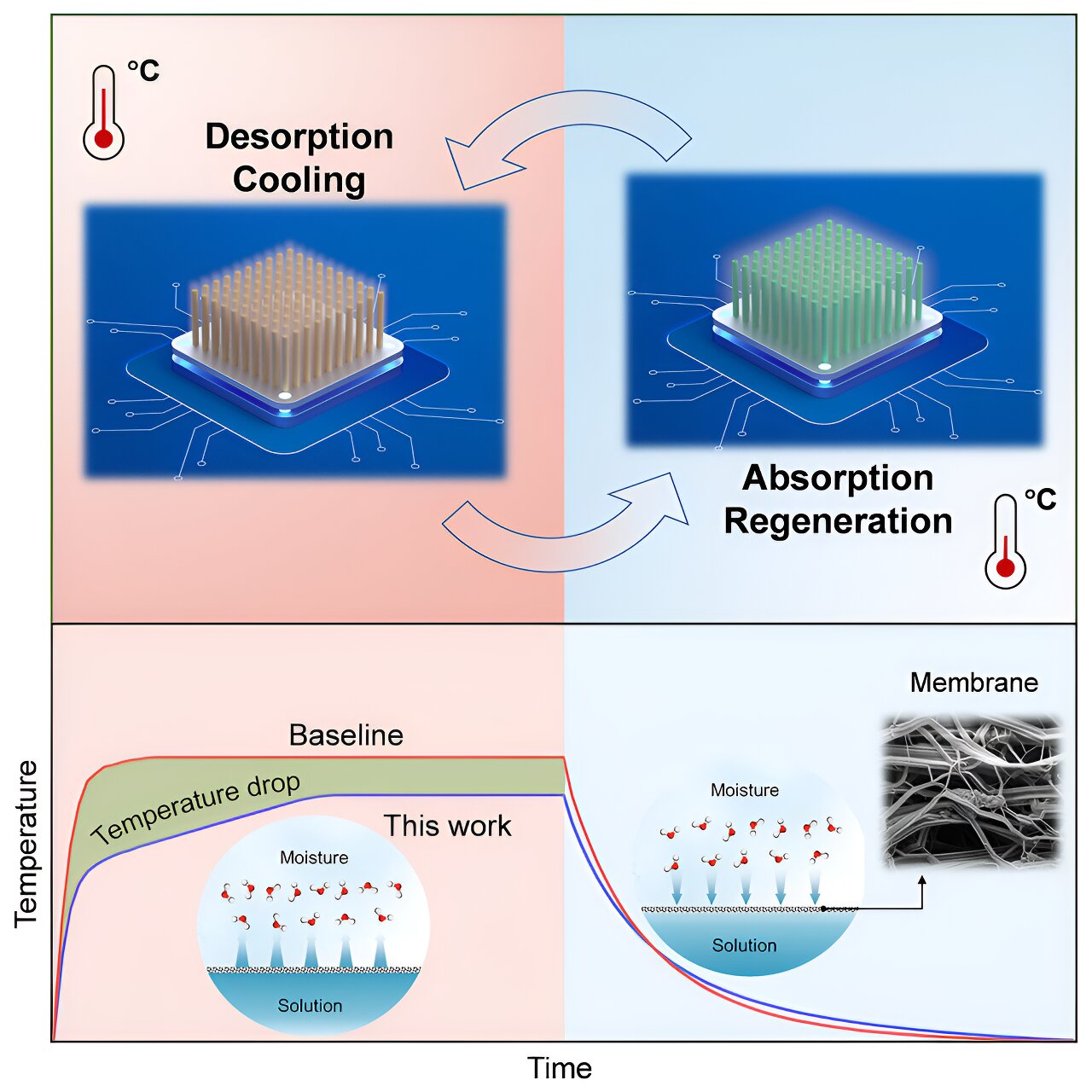

Salt solution cools computers, boosts performance

Researchers at the City University of Hong Kong found the secret to a more efficient, less expensive approach to keeping massive computer systems cool: Just add salt.

Processor made for AI speeds up genome assembly

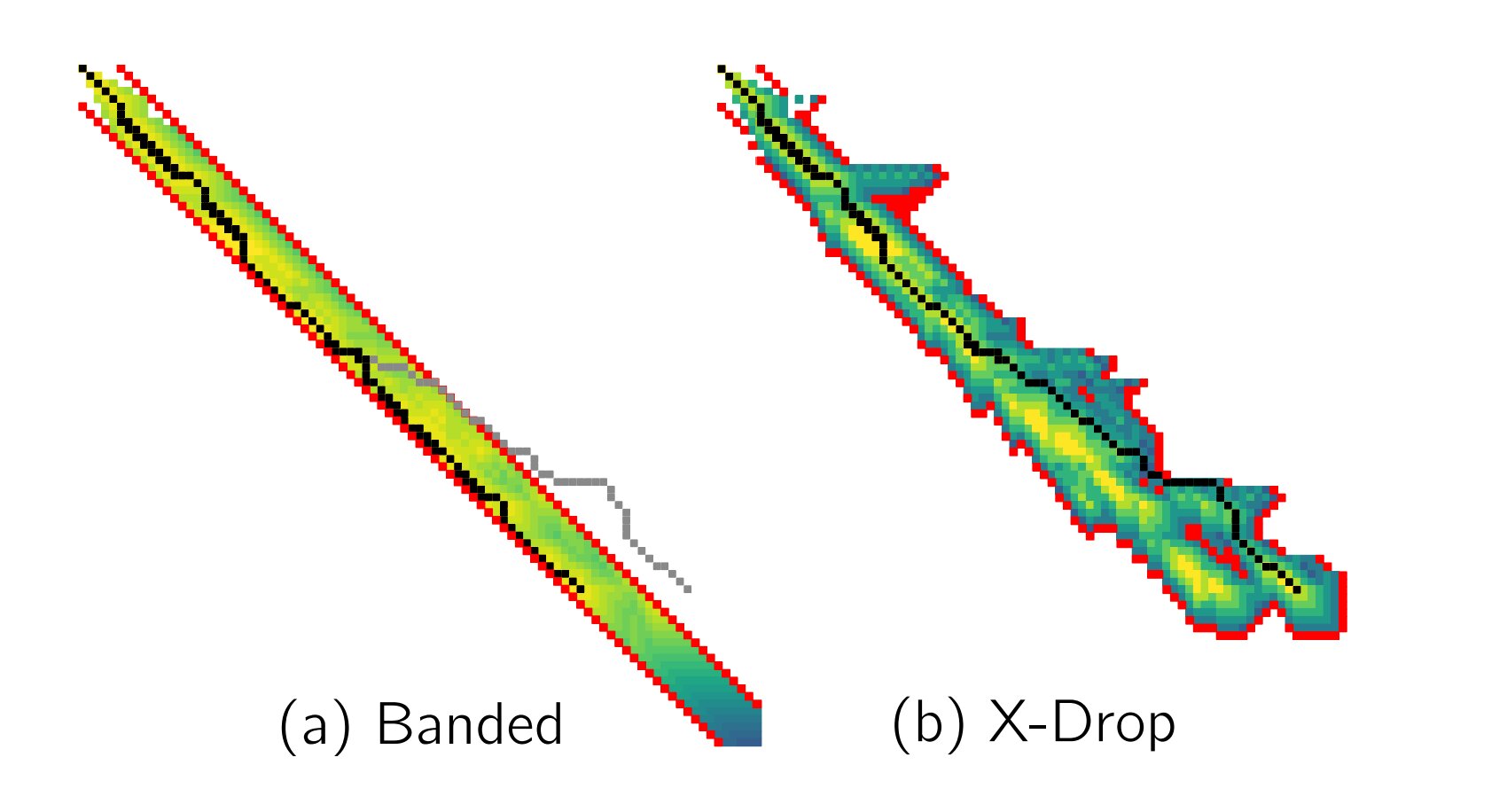

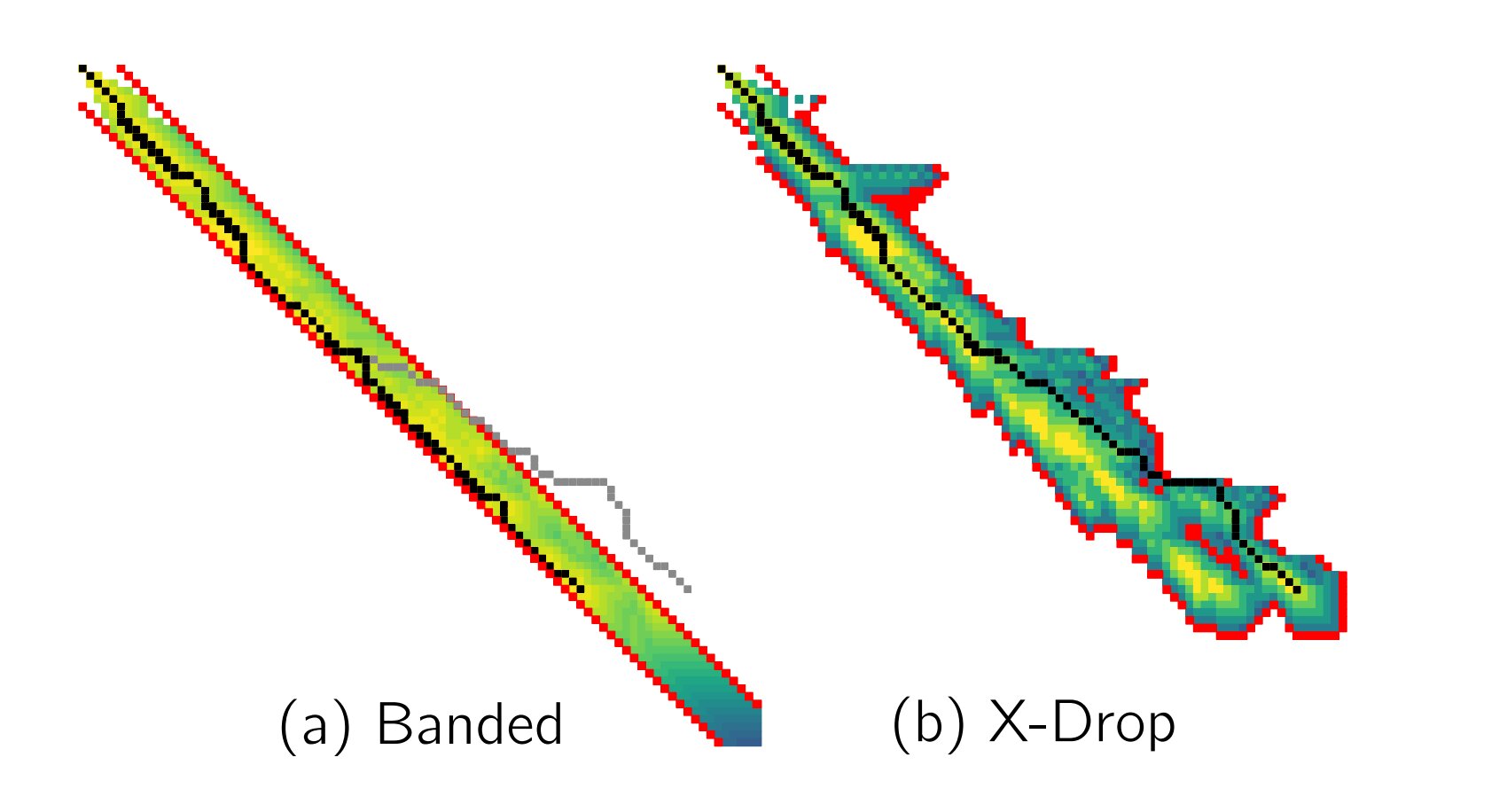

A hardware accelerator initially developed for artificial intelligence operations successfully speeds up the alignment of protein and DNA molecules, making the process up to 10 times faster than state-of-the-art methods.

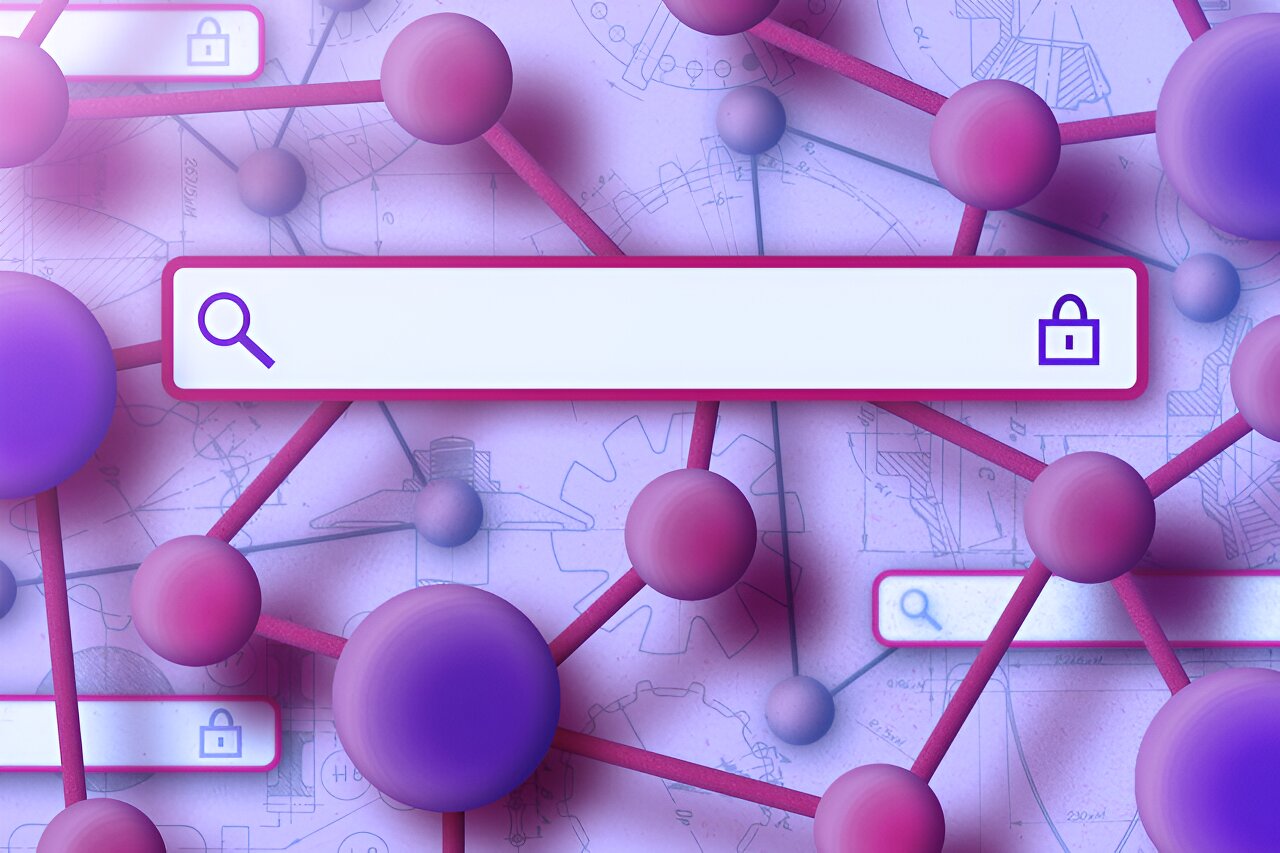

Accelerating AI tasks while preserving data security

With the proliferation of computationally intensive machine-learning applications, such as chatbots that perform real-time language translation, device manufacturers often incorporate specialized hardware components to rapidly move and process the massive amounts of data these systems demand.

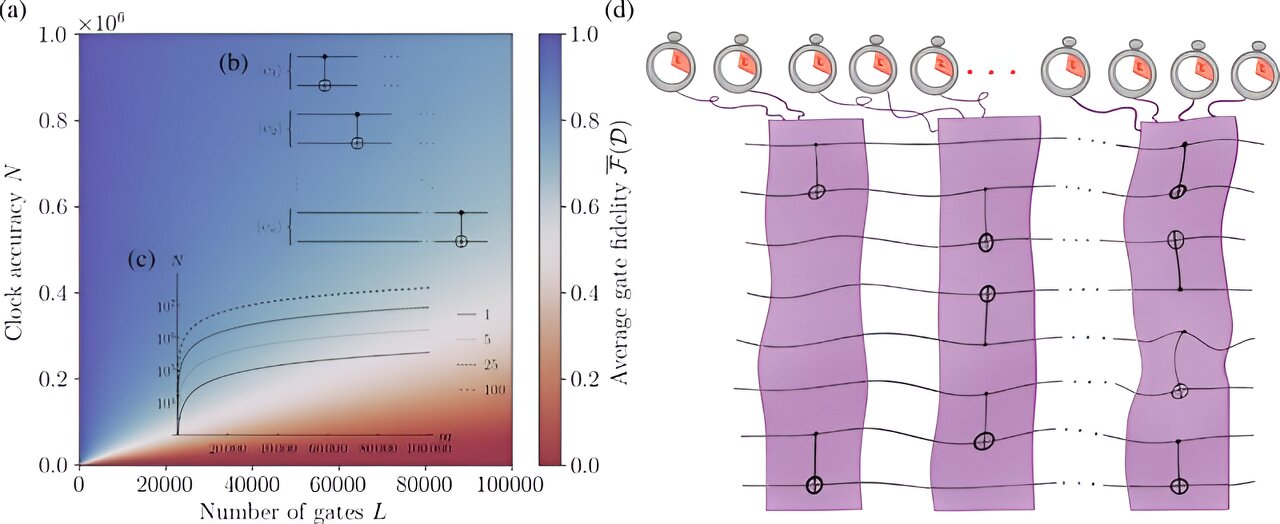

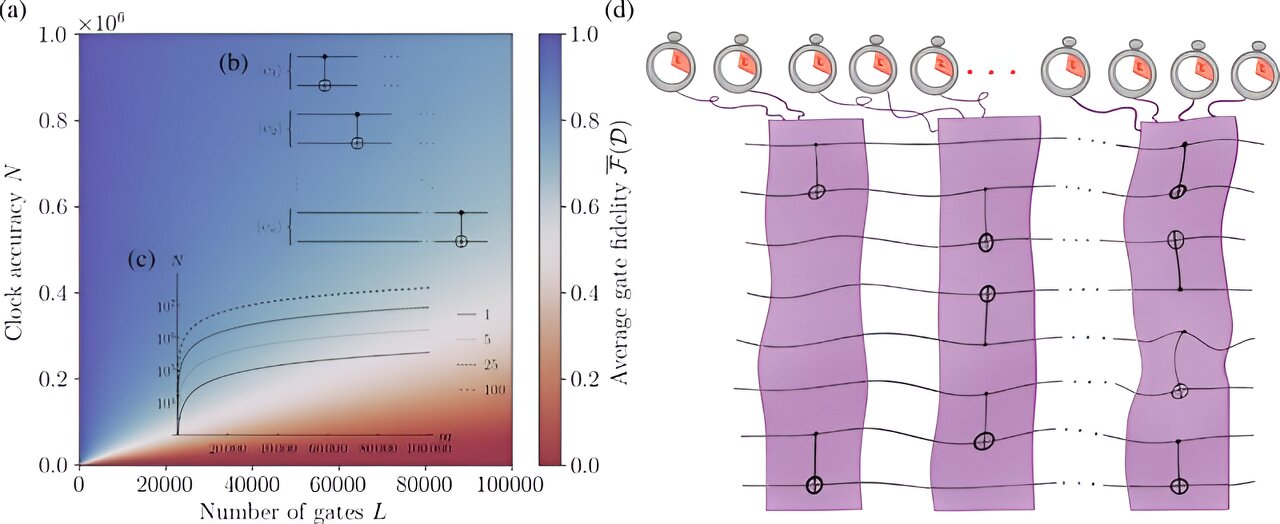

Late not great—imperfect timekeeping places significant limit on quantum computers

New research from a consortium of quantum physicists, led by Trinity College Dublin's Dr. Mark Mitchison, shows that imperfect timekeeping places a fundamental limit to quantum computers and their applications. The team claims that even tiny timing errors add up to place a significant impact on...

New techniques efficiently accelerate sparse tensors for massive AI models

Researchers from MIT and NVIDIA have developed two techniques that accelerate the processing of sparse tensors, a type of data structure that's used for high-performance computing tasks. The complementary techniques could result in significant improvements to the performance and...

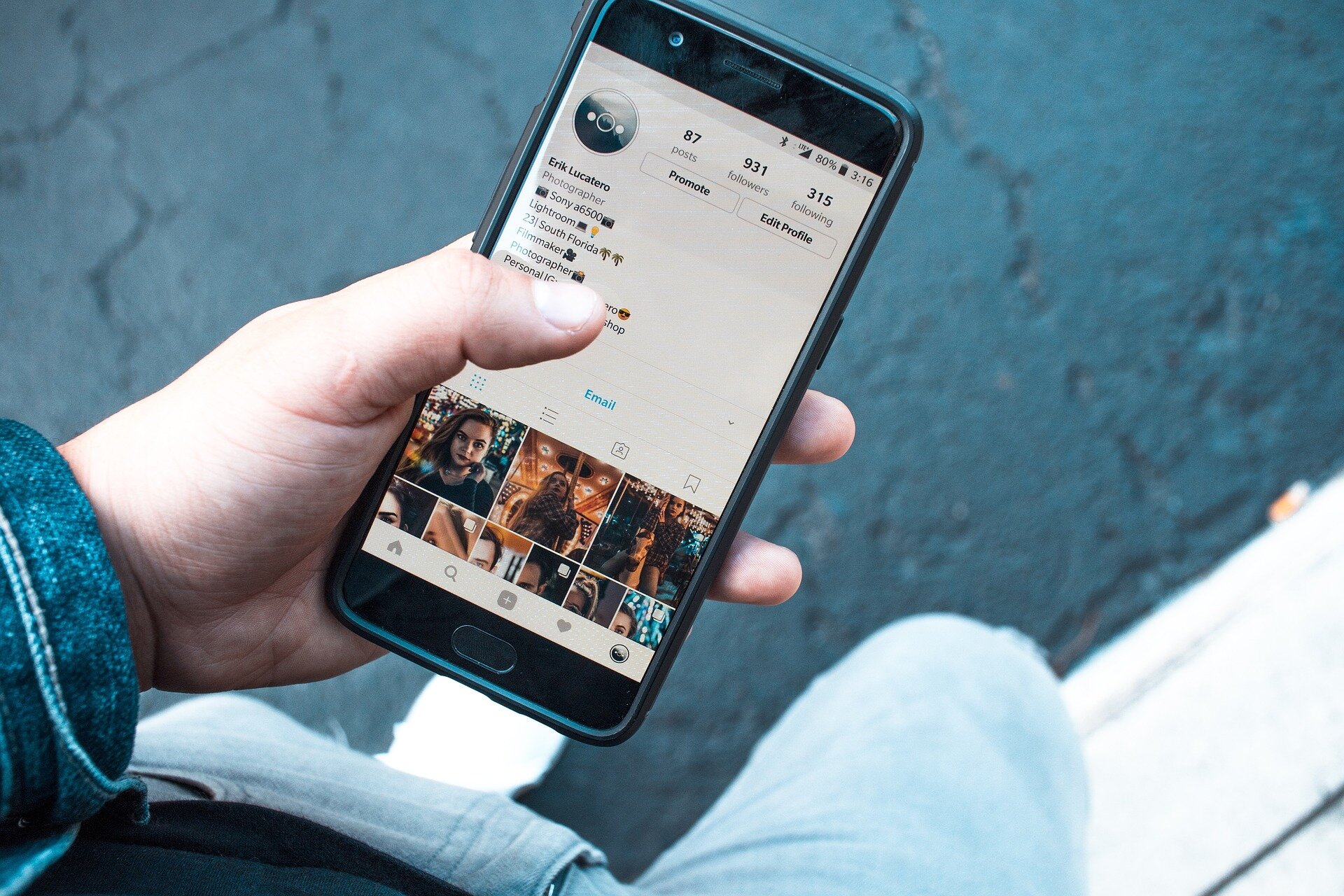

Study identifies human–AI interaction scenarios that lead to information cocoons

The widespread use of AI algorithms, particularly algorithms designed to recommend content and products to users based on their previous activity online, has led to the rise of new phenomena known as social media echo chambers and information cocoons. These phenomena pose limitations in the...

The brain may learn about the world the same way some computational models do

To make our way through the world, our brain must develop an intuitive understanding of the physical world around us, which we then use to interpret sensory information coming into the brain.

martinbayer

ACCESS: Top Secret

- Joined

- 6 January 2009

- Messages

- 2,374

- Reaction score

- 2,100

An illuminating experience: https://www.theguardian.com/books/2...e-elif-batuman?utm_source=pocket-newtab-en-us

Shades of HAL's passive-aggressiveness there, mixed with an unhealthy dose of misinformation/gaslighting...

Forest Green

ACCESS: Above Top Secret

- Joined

- 11 June 2019

- Messages

- 5,093

- Reaction score

- 6,674

What new federal cybersecurity policy means for government contractors

The most controversial section calls for holding software companies liable for producing insecure code.

Forest Green

ACCESS: Above Top Secret

- Joined

- 11 June 2019

- Messages

- 5,093

- Reaction score

- 6,674

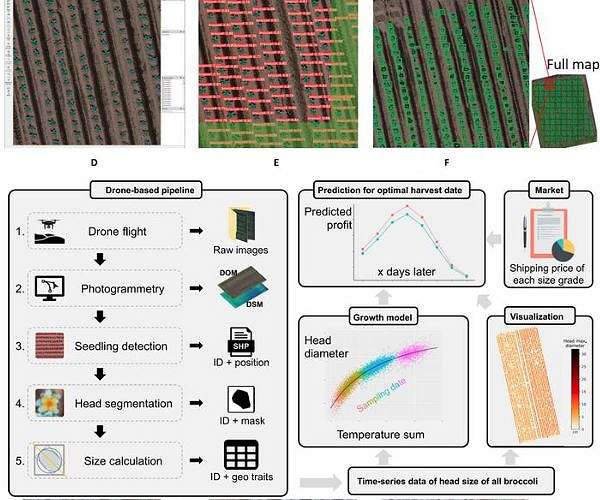

AI drones to help farmers optimize vegetable yields

Tokyo, Japan (SPX) Oct 04, 2023 - For reasons of food security and economic incentive, farmers continuously seek to maximize their marketable crop yields. As plants grow inconsistently, at the time of harvesting, there will inevitab

www.spacewar.com

- Joined

- 16 December 2010

- Messages

- 2,841

- Reaction score

- 2,084

An entire news service that seems to use AI instead of journalists

bnn.network

BNN Breaking - Headlines, World News

Breaking News Network (BNN) is an independent news network. We break the status-quo and offer a better approach to reporting and consuming the news.

Rhinocrates

ACCESS: Top Secret

- Joined

- 26 September 2006

- Messages

- 2,112

- Reaction score

- 4,509

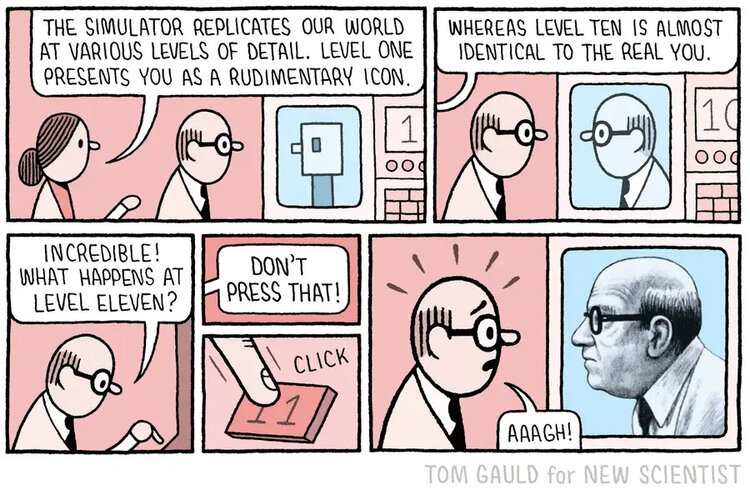

OK, not entirely serious. Sorry. Still, it raises an interesting question. As a tangent in his sf novel The Omega Expedition, Brian Stableford notes that human senses are limited and asks what would happen if you had direct machine-brain interfaces and the transmitted imagery was at a higher resolution than we can see. No heads explode, as it's fairly hard sf and the brain is remarkably flexible, but he doesn't speculate in much depth.

The image is humorous but makes a serious point. All rational people know that it's nuts for the government to forgive student loans en masse, especially for those who got degrees that they *knew* would not lead to careers that would pay enough to pay off the loans. But now AI is going to make a *lot* of fields non-lucrative, and it would be not just unwise but unethical to go into debt studying for a field that simply won't have any use for you.

I have had limited experience with AI on the internet. It seems that most systems in common use have pre-programmed guidelines that prevent discussion of some topics, or biases that slant the discussion toward the programmers' desired outcome. Since people with their own concepts of correctness set up the guidelines for AI, we are not really seeing the full potential of these systems. It would be interesting to see what an AI system would generate if it had no programmer-built constraints.

Rhinocrates

ACCESS: Top Secret

- Joined

- 26 September 2006

- Messages

- 2,112

- Reaction score

- 4,509

How Ukraine is using AI to fight Russia

From target hunting to catching sanctions-busters, its war is increasingly high-tech

A broad article with many fun facts. Major points:

...Open Minds Institute (OMI) in Kyiv, describes the work his research outfit did by generating these assessments with artificial intelligence (AI). Algorithms sifted through oceans of Russian social-media content and socioeconomic data on things ranging from alcohol consumption and population movements to online searches and consumer behaviour. The AI correlated any changes with the evolving sentiments of Russian “loyalists” and liberals over the potential plight of their country’s soldiers.

...drone designers commonly query ChatGPT as a “start point” for engineering ideas, like novel techniques for reducing vulnerability to Russian jamming. Another military use for AI, says the colonel, who requested anonymity, is to identify targets.

As soldiers and military bloggers have wisely become more careful with their posts, simple searches for any clues about the location of forces have become less fruitful. By ingesting reams of images and text, however, AI models can find potential clues, stitch them together and then surmise the likely location of a weapons system or a troop formation.

...uses the model to map areas where Russian forces are likely to be low on morale and supplies, which could make them a softer target. The AI finds clues in pictures, including those from drone footage, and from soldiers bellyaching on social media.

The use of AI helps Ukraine’s spycatchers identify people ... “prone to betrayal”.

...Palantir’s software and Delta, battlefield software that supports the Ukrainian army’s manoeuvre decisions. COTA's [Operations for Threats Assessment] “bigger picture” output provides senior officials with guidance on sensitive matters, including mobilisation policy.

Ukraine’s AI effort benefits from its society’s broad willingness to contribute data for the war effort. Citizens upload geotagged photos potentially relevant for the country’s defence into a government app called Diia (Ukrainian for “action”).

Ukraine’s biggest successes came early in the war when decentralised networks of small units were encouraged to improvise. Today, Ukraine’s AI “constructor process”, he argues, is centralising decision-making, snuffing out creative sparks “at the edges”. His assessment is open to debate. But it underscores the importance of human judgment in how any technology is used.

And so it begins. AI used to generate a fake recording of someone with the intended purpose of ruining their career/life. Fortunately it was caught, but soon this sort of thing will be undetectable.

View: https://twitter.com/rawsalerts/status/1783856169049543020