The exhaust energy from the exhaust stacks isn't actually wasted, though. It provides a significant amount of thrust compared to the propeller, especially at high altitude and high speed. I seem to remember a figure of a ~30 mph boost.

I came across a nice comparison between turbocharged and supercharged engines many years ago. Turbocharged engines were better at low speed and altitude; supercharged engines were superior above ~400 mph and above 20,000 ft, I seem to remember. But there was not a massive difference.

I believe that your first point is correct in some cases but not in others. As with most technical issues, advantages (and performance) are relative to conditions and requirements. Thrust-producing exhaust stacks demand a lot of experimental tuning work to get right. When they aren't right, they can even hurt performance through back pressure or poor scavenging. In the case of the Merlin, it took a while before the right solution was found. Ejector stacks also raise operational concerns: their visible exhaust flames are problematic for military aircraft operating at night.
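The thrust argument can be put in rough numbers with the simple momentum relation for a jet: thrust is the exhaust mass flow times the velocity the exhaust gains relative to the airplane, and the useful (thrust) power is that thrust times flight speed. A minimal Python sketch, assuming hypothetical round numbers for mass flow and exhaust velocity (not Merlin data) and ignoring pressure thrust and the added fuel mass:

```python
def exhaust_thrust_n(mass_flow_kg_s: float, jet_velocity_m_s: float,
                     flight_speed_m_s: float) -> float:
    """Momentum thrust of an exhaust jet in newtons (pressure thrust ignored)."""
    return mass_flow_kg_s * (jet_velocity_m_s - flight_speed_m_s)


def thrust_power_kw(thrust_n: float, flight_speed_m_s: float) -> float:
    """Useful propulsive power of a thrust at a given flight speed, in kW."""
    return thrust_n * flight_speed_m_s / 1000.0


# Hypothetical figures: 1.5 kg/s of exhaust leaving the stacks at 600 m/s.
for speed in (50.0, 150.0):  # slow climb vs. fast cruise, m/s
    thrust = exhaust_thrust_n(1.5, 600.0, speed)
    print(f"{speed:5.0f} m/s: thrust {thrust:5.0f} N, "
          f"thrust power {thrust_power_kw(thrust, speed):6.1f} kW")
```

Thrust falls slightly as the airplane speeds up, but thrust power (thrust times speed) rises sharply, which is consistent with ejector stacks paying off most at high speed.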

In your second point, I think you have mixed up the conditions. Supercharged engines (gear- or turbine-driven) will be superior at altitude. Unsupercharged or mildly supercharged engines will be superior at low level (hence the cropped supercharger impellers used when adapting Merlin engines for low-level use).

With few exceptions, military and commercial aero engines never make more power than they do at sea level. Maximum power is usually needed when getting a loaded aircraft off the ground. The engine will not need more as it climbs and cruises, because lift will increase with speed, fuel burn will reduce weight, and drag will be less in less dense air. So, if maximum power output were the issue (as it would be in race cars and Schneider Trophy racers), there would be little point in having a supercharger. A gear- or turbine-driven supercharger would just add weight and complexity. You can always get the power with a larger, lighter, and/or faster-turning engine.

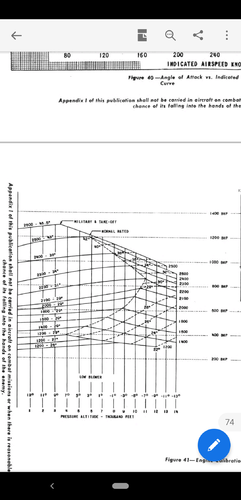

But maximum power output is, of course, not the only issue in a working airplane. As the airplane climbs, the air density decreases and power/thrust decreases with it. There is less oxygen per intake stroke, less fuel burned per expansion stroke, and less high-pressure, high-velocity gas in the exhaust. So an engine's critical altitude (the altitude at which sea-level power begins to fall off due to decreasing air pressure) is what matters in practical, non-racing applications. Superchargers (mechanical or turbo) are used to delay the point at which sea-level power falls off by artificially increasing the density of the intake air. As altitude increases, both have to do more work. But the power required for that work comes from different sources that have very different characteristics, costs, and benefits, and it is in this respect that mechanical and turbo superchargers have their respective advantages and disadvantages.
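The density argument can be illustrated with the International Standard Atmosphere (ISA). The Python sketch below (troposphere only) computes how far an unsupercharged engine's power falls with altitude, using the empirical Gagg-Farrar relation P/P_SL = 1.132σ − 0.132 (σ being the density ratio), the pressure ratio a supercharger must supply to restore sea-level intake pressure, and the ideal (isentropic) work that compression costs per kilogram of air. These are textbook idealizations chosen for illustration: real superchargers are well short of isentropic, and compression heats the air, so restoring sea-level pressure does not fully restore sea-level density.

```python
# International Standard Atmosphere, troposphere only (0 to 11,000 m).
T0 = 288.15            # sea-level temperature, K
P0 = 101_325.0         # sea-level pressure, Pa
LAPSE = 0.0065         # temperature lapse rate, K/m
R_AIR = 287.053        # specific gas constant of air, J/(kg K)
G0 = 9.80665           # standard gravity, m/s^2
CP = 1005.0            # specific heat of air at constant pressure, J/(kg K)
K = 0.4 / 1.4          # (gamma - 1) / gamma for air


def isa(alt_m: float) -> tuple[float, float, float]:
    """Return (temperature K, pressure Pa, density kg/m^3) at altitude."""
    if not 0.0 <= alt_m <= 11_000.0:
        raise ValueError("this sketch only models the troposphere")
    t = T0 - LAPSE * alt_m
    p = P0 * (t / T0) ** (G0 / (R_AIR * LAPSE))
    return t, p, p / (R_AIR * t)


def unsupercharged_power_fraction(alt_m: float) -> float:
    """Gagg-Farrar empirical lapse: P/P_SL = 1.132*sigma - 0.132."""
    sigma = isa(alt_m)[2] / isa(0.0)[2]
    return 1.132 * sigma - 0.132


def pressure_ratio_for_sea_level(alt_m: float) -> float:
    """Pressure ratio a supercharger needs to restore sea-level intake pressure."""
    return P0 / isa(alt_m)[1]


def ideal_compression_work_kj_per_kg(alt_m: float) -> float:
    """Isentropic work to compress 1 kg of ambient air to sea-level pressure."""
    t_ambient = isa(alt_m)[0]
    pr = pressure_ratio_for_sea_level(alt_m)
    return CP * t_ambient * (pr ** K - 1.0) / 1000.0


for alt in (0.0, 3000.0, 6000.0, 9000.0):
    print(f"{alt:6.0f} m: unsupercharged {unsupercharged_power_fraction(alt):.2f} "
          f"of sea-level power, PR needed {pressure_ratio_for_sea_level(alt):.2f}, "
          f"ideal work {ideal_compression_work_kj_per_kg(alt):5.1f} kJ/kg")
```

Both the required pressure ratio and the compression work per kilogram climb steadily with altitude, which is the "more work" each type of supercharger has to find a power source for.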

During the war, in England, the mechanical supercharger had the advantage of being very highly developed (largely due to Bristol's early lead in air-cooled engines and Rolls-Royce's pre-war investments and racing experience; Napier did not benefit). The mechanical approach was expensive and demanded a lot of development. The supercharger was driven by precision-machined gears and clutches, and the whole installation was integral with, and dedicated to, a given engine design and model. Superchargers weren't interchangeable, mass-produced units and couldn't be added to any desired engine (though Allison worked on some designs with this in mind).

Mechanical superchargers were also efficient only within a fairly narrow altitude band. In general, to achieve a higher critical altitude, engineers had to redesign and/or add hardware. Rolls-Royce were the masters at addressing this. But the solutions (multi-speed drives, multi-stage impellers, and intercoolers) added weight and complexity. The parts had to be finely tuned and governed so that abrupt speed changes did not catastrophically damage components. The extra parts increased the power consumption of the supercharger and reduced the net gains: complexity and weight yield diminishing returns. This is probably why two-stage engines were successful but, with the exception of German diesels, three-stage engines were not. As several of us have rightly pointed out, tuned exhaust ejectors add thrust that can offset the power consumption of the supercharger, but only in the supercharger's designed operating range. At other altitudes, the supercharger will consume power without producing optimal power or exhaust thrust.
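The critical-altitude trade-off for a gear-driven blower can be sketched by treating each gear as giving a fixed pressure ratio (a simplification: a real impeller's pressure ratio also varies with inlet temperature and airflow). A blower can hold sea-level intake pressure only up to the altitude where ambient pressure times its pressure ratio equals sea-level pressure, so adding a second, faster gear buys a second, higher critical altitude. A minimal Python sketch, assuming ISA troposphere conditions; the two gear pressure ratios are hypothetical, not figures for any real engine.

```python
T0, P0, LAPSE = 288.15, 101_325.0, 0.0065   # ISA sea level and lapse rate
EXP = 9.80665 / (287.053 * LAPSE)            # ISA pressure exponent, about 5.256


def isa_pressure(alt_m: float) -> float:
    """ISA troposphere pressure in Pa."""
    if not 0.0 <= alt_m <= 11_000.0:
        raise ValueError("this sketch only models the troposphere")
    return P0 * ((T0 - LAPSE * alt_m) / T0) ** EXP


def critical_altitude_m(pressure_ratio: float, target_pa: float = P0) -> float:
    """Highest altitude at which a blower with this fixed pressure ratio can
    still deliver target_pa to the intake (inverse of isa_pressure)."""
    ambient_needed = target_pa / pressure_ratio
    t = T0 * (ambient_needed / P0) ** (1.0 / EXP)
    return (T0 - t) / LAPSE


def select_gear(alt_m: float, gear_pressure_ratios: list[float],
                target_pa: float = P0) -> float:
    """Choose the lowest blower gear that still holds target_pa at alt_m.

    A lower gear absorbs less drive power. Above the top gear's critical
    altitude the top gear is the best available and power falls off.
    """
    ambient = isa_pressure(alt_m)
    for pr in sorted(gear_pressure_ratios):
        if ambient * pr >= target_pa:
            return pr
    return max(gear_pressure_ratios)


GEARS = [1.6, 2.2]  # hypothetical low- and high-gear pressure ratios
for pr in GEARS:
    print(f"PR {pr}: critical altitude about {critical_altitude_m(pr):,.0f} m")
for alt in (0.0, 3000.0, 5000.0, 8000.0):
    print(f"{alt:6.0f} m -> gear with PR {select_gear(alt, GEARS)}")
```

Below each gear's critical altitude the throttle has to be partly closed to avoid over-boosting, so part of the blower's drive power is spent for nothing; that is the narrow-band inefficiency of the mechanical drive.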

Turbochargers had the disadvantage of relatively little development before the war, largely due to fuel and metallurgical issues (see https://history.nasa.gov/SP-4306/ch3.htm, S. D. Heron's autobiography, and S. D. Heron's Development of Aviation Fuels). During the war, this arguably limited the turbocharger's success relative to the mechanical supercharger. But post-war, turbochargers replaced their mechanical counterparts in almost all applications, from light airplanes to airliners. The turbocharger is relatively simple compared to a mechanical unit, because it lacks most of the multi-speed clutching and gearing. The units were mass-produced in various sizes that could be more or less bolted on to a variety of production engines. Performance-wise, the turbocharger had the huge advantage of automatically producing higher supercharger speed, and thus higher pressure, as altitude increased, up to the critical altitude. As with the gear-driven unit, the compressor/impeller had to turn faster at altitude to maintain sea-level power. But as the outside air thinned, the turbine had to overcome less back pressure and thus automatically spun the compressor faster. No gear trains. No clutches. No complex mechanical governors. A simple (if sometimes troublesome) blow-off valve, or waste gate, prevented compressor over-speed and over-pressure problems by venting excess exhaust gas to the atmosphere. This was less efficient than a properly tuned ejector stack below critical altitude, but the inefficiency was largely offset by the lack of mechanical losses under these conditions. On the other hand, turbochargers compressed the intake air in close proximity to the exhaust, and components could get very hot. All wartime turbocharger installations suffered from fires, detonation, and overheating, problems that were kept in bounds mainly by the abundant use of Anti-Detonant Injection (ADI: water injection with alcohol as antifreeze) during takeoff and landing.
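The self-regulating behaviour can be illustrated with a steady-state power balance between turbine and compressor. As ambient pressure falls, the compressor needs a higher pressure ratio, but the turbine also gets a larger expansion ratio into the thinner outside air, so the waste gate can pass less and less exhaust; once it is fully closed, the engine has reached its critical altitude. A minimal Python sketch, assuming ISA conditions, equal air and exhaust mass flow and specific heat, and a fixed turbine inlet pressure and temperature. All operating values are hypothetical, so the critical altitude it finds is an artifact of those choices, not a figure for any real installation.

```python
from typing import Optional

T0, P0, LAPSE = 288.15, 101_325.0, 0.0065
EXP = 9.80665 / (287.053 * LAPSE)   # ISA pressure exponent
K = 0.4 / 1.4                       # (gamma - 1) / gamma, used for air and exhaust

# Hypothetical operating assumptions, not data for any real installation:
TURBINE_INLET_PA = 1.1 * P0   # exhaust pressure ahead of the turbine, held fixed
TURBINE_INLET_K = 700.0       # exhaust temperature ahead of the turbine
ETA_COMPRESSOR = 0.65
ETA_TURBINE = 0.65


def isa(alt_m: float) -> tuple[float, float]:
    """ISA troposphere (temperature K, pressure Pa)."""
    if not 0.0 <= alt_m <= 11_000.0:
        raise ValueError("this sketch only models the troposphere")
    t = T0 - LAPSE * alt_m
    return t, P0 * (t / T0) ** EXP


def turbine_share(alt_m: float) -> float:
    """Fraction of the exhaust that must pass through the turbine (the rest
    leaves through the waste gate) to hold sea-level intake pressure.

    Power balance per kg: the compressor needs T1*(PR**K - 1)/eta_c and the
    turbine gives T_exh*eta_t*(1 - ER**-K) per kg of exhaust it receives.
    """
    t_amb, p_amb = isa(alt_m)
    pr = P0 / p_amb                    # compressor pressure ratio required
    er = TURBINE_INLET_PA / p_amb      # turbine expansion ratio available
    need = t_amb * (pr ** K - 1.0) / ETA_COMPRESSOR
    avail = TURBINE_INLET_K * ETA_TURBINE * (1.0 - er ** -K)
    return need / avail


def critical_altitude_m(step_m: float = 10.0) -> Optional[float]:
    """Lowest altitude where the waste gate must be fully closed, if any."""
    alt = 0.0
    while alt <= 11_000.0:
        if turbine_share(alt) >= 1.0:
            return alt
        alt += step_m
    return None


for alt in (0.0, 3000.0, 6000.0, 9000.0):
    share = min(turbine_share(alt), 1.0)
    print(f"{alt:6.0f} m: waste gate passes {1 - share:.0%} of the exhaust")
print("critical altitude about", critical_altitude_m(), "m")
```

No gears or governors appear anywhere in the model: the only control is how much exhaust bypasses the turbine, which is the simplicity argument made above.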

Overall, I suspect that the lower costs that result from suitability for mass production and interchangeability of parts explain why the USAAF preferred the turbo and why it replaced the mechanical supercharger in the post-war civil markets. When the performance differences aren't that great in theory, and when cost and availability are critical, an off-the-shelf unit almost always wins over a multi-year bespoke engineering effort. In 1939, England had already completed that effort, so following through on the Merlin made sense. But it was a dead end (aside, of course, from all the expertise RR gained in developing the compressor itself, which, when coupled with a gas-turbine drive, put them at the forefront of jet development).

I said that there were few exceptions to the rule that military and commercial aero engines never make more power than they do at sea level. The exceptions are the Austrian and German "super-compressed" engines of the First World War. These had compression ratios optimized for the intended operating altitude of the aircraft. As I understand them, a decompression lever on the camshaft let a ground crewman lower the compression enough to swing the prop and start the engine. The lever was then returned to the full-compression position. From sea level up to the optimal altitude, the pilot had to operate the engine at part throttle, and therefore low power, to avoid overstressing it. This was effective and met the immediate need, but it was inflexible, inefficient, and unlikely to be good for durability.
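The logic of the "super-compressed" engine can be sketched by using end-of-compression cylinder pressure as a crude proxy for stress. If the compression ratio is raised so that, in the thin air at the design altitude, the cylinder reaches the same pressure at the end of compression as a normal engine does at sea level, then near the ground the intake pressure must be throttled back to roughly the design-altitude ambient pressure. A minimal Python sketch under those assumptions: ISA troposphere, an assumed polytropic exponent of 1.3, and a hypothetical base compression ratio and design altitude. It ignores temperature, detonation, and mixture effects.

```python
T0, P0, LAPSE = 288.15, 101_325.0, 0.0065
EXP = 9.80665 / (287.053 * LAPSE)   # ISA pressure exponent
N_POLY = 1.3                        # assumed polytropic exponent of the compression stroke


def isa_pressure(alt_m: float) -> float:
    """ISA troposphere pressure in Pa."""
    if not 0.0 <= alt_m <= 11_000.0:
        raise ValueError("this sketch only models the troposphere")
    return P0 * ((T0 - LAPSE * alt_m) / T0) ** EXP


def end_of_compression_pa(intake_pa: float, cr: float) -> float:
    """Cylinder pressure at the end of the compression stroke (polytropic)."""
    return intake_pa * cr ** N_POLY


def overcompressed_ratio(base_cr: float, design_alt_m: float) -> float:
    """Compression ratio that reproduces base_cr's sea-level compression
    pressure while breathing the thinner air at design_alt_m."""
    return base_cr * (P0 / isa_pressure(design_alt_m)) ** (1.0 / N_POLY)


def max_intake_pa(cr: float, base_cr: float) -> float:
    """Intake (manifold) pressure cap that keeps the high-compression engine
    at or below the base engine's sea-level compression pressure."""
    return end_of_compression_pa(P0, base_cr) / cr ** N_POLY


BASE_CR, DESIGN_ALT = 5.0, 2000.0   # hypothetical base ratio and design altitude (m)
cr = overcompressed_ratio(BASE_CR, DESIGN_ALT)
print(f"compression ratio {cr:.2f}; at sea level throttle to "
      f"{max_intake_pa(cr, BASE_CR) / P0:.0%} of ambient pressure")
```

The throttling cap works out to the design-altitude ambient pressure, which is why the engine could only deliver its full output once the aircraft had climbed to that altitude.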